The purpose of this article is to show in detail how easy it is to perform a storage migration, or change disks, on your Delphix engine.

As a prerequisite, you need to create the new disks and attach them to your engine.

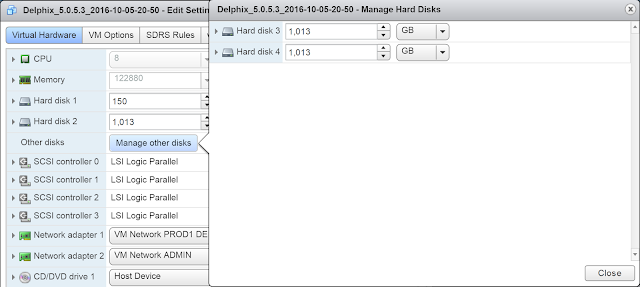

Here I'm showing the list of my old storage disks.

After creating and attaching the new storage disks, the list shows them alongside the old ones.

Notice the new set of data disks with a size of 1010GB.

Now connect to the server admin console as the "sysadmin" user and assign the new set of disks to the Delphix appliance.

After the disks are assigned, they show up as configured.

Next, I connect to the engine CLI as the "sysadmin" user and navigate to "storage device" to initiate the storage migration operation:

    LandsharkEngine storage device> ls
    Objects
    NAME      CONFIGURED  SIZE    EXPANDABLESIZE
    Disk3:0   false       1013GB  -
    Disk10:2  true        1010GB  0B
    Disk10:1  true        1010GB  0B
    Disk1:0   false       1013GB  -
    Disk10:0  true        150GB   0B
    Disk10:3  true        1010GB  0B
    Disk2:0   false       1013GB  -
    Operations
    initialize
    LandsharkEngine storage device>

I have to remove all the disks with a size of 1013GB (the old storage disks).
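As a rough illustration of that selection step, the sketch below parses a copy of the listing and picks out the devices whose size matches the old disks. The helper names (`parse_listing`, `disks_with_size`) are hypothetical and not part of any Delphix tooling; the table text is simply pasted from the `ls` output.

```python
# Listing pasted from the `ls` output of the "storage device" context.
LISTING = """\
NAME      CONFIGURED  SIZE    EXPANDABLESIZE
Disk3:0   false       1013GB  -
Disk10:2  true        1010GB  0B
Disk10:1  true        1010GB  0B
Disk1:0   false       1013GB  -
Disk10:0  true        150GB   0B
Disk10:3  true        1010GB  0B
Disk2:0   false       1013GB  -
"""

# Size that identifies the old disks in this article (an assumption that
# only holds because old and new disks happen to differ in size here).
OLD_DISK_SIZE = "1013GB"


def parse_listing(text):
    """Turn the whitespace-separated table into a list of dicts."""
    lines = [line.split() for line in text.strip().splitlines()]
    header, rows = lines[0], lines[1:]
    return [dict(zip(header, row)) for row in rows]


def disks_with_size(devices, size):
    """Return the sorted names of devices whose SIZE column equals `size`."""
    return sorted(d["NAME"] for d in devices if d["SIZE"] == size)


devices = parse_listing(LISTING)
old_disks = disks_with_size(devices, OLD_DISK_SIZE)
print(old_disks)  # ['Disk1:0', 'Disk2:0', 'Disk3:0']
```

Matching on size works only for this particular setup; in general, the serial of each device is a safer way to tell old disks from new ones.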

Select each of the disks concerned to check its configuration information, including the VMDK UUID:

    LandsharkEngine storage device> select Disk3:0
    LandsharkEngine storage device 'Disk3:0'> ls
    Properties
        type: ConfiguredStorageDevice
        name: Disk3:0
        bootDevice: false
        configured: true
        dataNode: DATA_NODE-1
        expandableSize: 0B
        model: Virtual disk
        reference: STORAGE_DEVICE-6000c29a0882955c48d7c799b40403fb-DATA_NODE-1
        serial: 6000c29a0882955c48d7c799b40403fb
        size: 1013GB
        usedSize: 1.64GB
        vendor: VMware
    Operations
    configure
    expand
    remove
    removeVerify
    LandsharkEngine storage device 'Disk3:0'>

Run removeVerify to simulate the removal and check its effect on free storage and mapping memory:

    LandsharkEngine storage device 'Disk3:0'> removeVerify
    LandsharkEngine storage device 'Disk3:0' removeVerify *> commit
        type: StorageDeviceRemovalVerifyResult
        newFreeBytes: 4.92TB
        newMappingMemory: 13.70MB
        oldFreeBytes: 5.90TB
        oldMappingMemory: 0B
    LandsharkEngine storage device 'Disk3:0'>
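To make those numbers easier to read, here is a small sketch that converts the reported values to bytes and computes how much free space the removal costs and how much mapping memory it adds. It assumes the CLI uses binary units (1TB = 1024GB), which the output does not confirm, and the "free space stays positive" check is only my own sanity check, not the criterion Delphix applies.

```python
# Values copied from the removeVerify result for Disk3:0.
RESULT = {
    "newFreeBytes": "4.92TB",
    "newMappingMemory": "13.70MB",
    "oldFreeBytes": "5.90TB",
    "oldMappingMemory": "0B",
}

# Assumption: binary units (1KB = 1024B). The CLI output does not state this.
UNITS = {"B": 1, "KB": 1024, "MB": 1024**2, "GB": 1024**3, "TB": 1024**4}


def to_bytes(text):
    """Convert a size string such as '4.92TB' into a number of bytes."""
    # Try the two-letter suffixes before the bare 'B' so 'TB' is not read as 'B'.
    for suffix in sorted(UNITS, key=len, reverse=True):
        if text.endswith(suffix):
            return float(text[: -len(suffix)]) * UNITS[suffix]
    raise ValueError(f"unrecognised size: {text!r}")


# Free space given up by removing the disk, expressed in GB.
free_drop_gb = (
    to_bytes(RESULT["oldFreeBytes"]) - to_bytes(RESULT["newFreeBytes"])
) / UNITS["GB"]

# Extra memory needed to map the evacuated blocks, expressed in MB.
extra_mapping_mb = (
    to_bytes(RESULT["newMappingMemory"]) - to_bytes(RESULT["oldMappingMemory"])
) / UNITS["MB"]

# Home-grown sanity check: the pool must still have free space afterwards.
removal_leaves_free_space = to_bytes(RESULT["newFreeBytes"]) > 0

print(f"free space drops by about {free_drop_gb:.0f} GB")
print(f"mapping memory grows by about {extra_mapping_mb:.2f} MB")
print("free space remains:", removal_leaves_free_space)
```

The drop of roughly 1TB is in line with taking one 1013GB disk out of the pool, bearing in mind that the CLI rounds the TB figures to two decimals.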

Since all the verifications are OK, I can now remove the disk:

    LandsharkEngine storage device 'Disk3:0'> remove
    LandsharkEngine storage device 'Disk3:0' remove *> commit
        Dispatched job JOB-855

Repeat the same steps for the other disks (Disk2:0 and Disk1:0).
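If it helps to have the sequence written down per disk, the sketch below prints the commands used above for Disk3:0, once for each remaining disk. It only generates text to type or paste; `removal_commands` is a hypothetical helper, and it assumes every sequence starts from the "storage device" context and that you review the removeVerify result before going on to remove.

```python
def removal_commands(disk):
    """Return the CLI commands used in this article to remove one disk.

    Assumes the session is already in the "storage device" context.
    """
    return [
        f"select {disk}",
        "removeVerify",  # simulate the removal first
        "commit",        # review the reported result before continuing
        "remove",
        "commit",        # dispatches the removal job
    ]


for disk in ["Disk2:0", "Disk1:0"]:
    print(f"# {disk}")
    for command in removal_commands(disk):
        print(command)
```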

After the disk remove operation, their assignment status changes to “Unassigned”.

The same is visible from the CLI (the configured status is false):

    LandsharkEngine storage device> ls
    Objects
    NAME      CONFIGURED  SIZE    EXPANDABLESIZE
    Disk3:0   false       1013GB  -
    Disk10:2  true        1010GB  0B
    Disk10:1  true        1010GB  0B
    Disk1:0   false       1013GB  -
    Disk10:0  true        150GB   0B
    Disk10:3  true        1010GB  0B
    Disk2:0   false       1013GB  -
    Operations
    initialize
    LandsharkEngine storage device>

Once the evacuation is complete, a fault is generated for the removed disks.

To fix the fault, detach the disks from your hypervisor; the fault will then clear automatically.
