[root@linuxsource ~]# yum install -y java-1.8.0-openjdk.x86_64

Create ssh key on localhost and set permissions.

[root@linuxsource ~]# ssh-keygen -t rsa -P ""

Generating public/private rsa key pair.

Enter file in which to save the key (/root/.ssh/id_rsa):

Your identification has been saved in /root/.ssh/id_rsa.

Your public key has been saved in /root/.ssh/id_rsa.pub.

The key fingerprint is:

2f:4c:14:55:c6:89:a9:cc:54:4e:a1:fd:43:d4:7f:8d root@linuxsource.delphix.local

The key's randomart image is:

+--[ RSA 2048]----+

| .o=*+o |

| .*ooo . |

| +o.o . o.|

| .+ o E +|

| S o .|

| o . . |

| o . |

| . |

| |

+-----------------+

[root@linuxsource ~]# cat ~/.ssh/id_rsa.pub >> ~/.ssh/authorized_keys

[root@linuxsource ~]# ssh localhost

The authenticity of host 'localhost (127.0.0.1)' can't be established.

RSA key fingerprint is f3:9a:e9:88:be:b9:f9:16:71:35:0f:73:d7:18:86:cf.

Are you sure you want to continue connecting (yes/no)? yes

Warning: Permanently added 'localhost' (RSA) to the list of known hosts.

Last login: Mon Aug 17 07:57:25 2020 from 192.168.247.1

[root@linuxsource ~]# exit

[root@linuxsource ~]# ssh localhost

Last login: Mon Aug 17 08:04:10 2020 from localhost

[root@linuxsource ~]# chmod 600 ~/.ssh/authorized_keys

Now it's time to install HADOOP
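Whichever mirror the tarball comes from, it is worth comparing its SHA-512 digest with the one published on the Apache download page before unpacking it. The mirror URL used in this post may no longer resolve; older releases are normally kept under archive.apache.org/dist/hadoop/common/. The following is a minimal sketch, assuming GNU `sha512sum` is installed. `verify_sha512` is a hypothetical helper, not part of Hadoop, and the digest has to be copied from the official page.

```shell
# Hypothetical helper: compare a file's SHA-512 digest with an expected hex string.
# Usage: verify_sha512 <file> <expected-hex-digest>
verify_sha512() {
    file=$1
    # Normalise the expected digest to lower case, as sha512sum prints lower case
    expected=$(printf '%s' "$2" | tr 'A-F' 'a-f')
    # sha512sum prints "<digest>  <filename>"; keep only the digest
    actual=$(sha512sum "$file" | awk '{print $1}')
    if [ "$actual" = "$expected" ]; then
        echo "OK: $file"
    else
        echo "MISMATCH: $file" >&2
        return 1
    fi
}

# Example (the placeholder must be replaced with the published digest):
# verify_sha512 hadoop-3.2.1.tar.gz "<sha512 from the Apache download page>" \
#     && tar -zxf hadoop-3.2.1.tar.gz
```

Chaining the extraction with `&&` means a corrupted or tampered download is never unpacked.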

wget https://www-us.apache.org/dist/hadoop/common/stable/hadoop-3.2.1.tar.gz

tar -zxvf hadoop-3.2.1.tar.gz

mv hadoop-3.2.1 hadoop

Update delphix user profile environment (.bashrc or .profile)

export JAVA_HOME=/usr/lib/jvm/java-1.8.0-openjdk-1.8.0.201.b09-1.el6_10.x86_64/jre ## Change it according to your system

export HADOOP_HOME=/u02/hadoop ## Change it according to your system

export HADOOP_INSTALL=$HADOOP_HOME

export HADOOP_MAPRED_HOME=$HADOOP_HOME

export HADOOP_COMMON_HOME=$HADOOP_HOME

export HADOOP_HDFS_HOME=$HADOOP_HOME

export HADOOP_YARN_HOME=$HADOOP_HOME

export HADOOP_COMMON_LIB_NATIVE_DIR=$HADOOP_HOME/lib/native

export PATH=$PATH:$HADOOP_HOME/sbin:$HADOOP_HOME/bin

Update the HADOOP environment file $HADOOP_HOME/etc/hadoop/hadoop-env.sh with the JAVA_HOME variable.
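Since the exact JDK path differs from one system to another, JAVA_HOME can be derived from the `java` binary instead of being typed by hand. This is only a sketch: `java_home_from_bin` is a hypothetical helper, and it assumes GNU `readlink -f` and that the resolved path ends in `/bin/java`.

```shell
# Hypothetical helper: strip the trailing /bin/java from a resolved java path.
java_home_from_bin() {
    printf '%s\n' "${1%/bin/java}"
}

# Resolve the real java binary behind the alternatives symlinks, then append
# the export to hadoop-env.sh. Left commented out so nothing is modified by
# accident; HADOOP_HOME is assumed to be set as in the profile above.
# java_bin=$(readlink -f "$(command -v java)")
# echo "export JAVA_HOME=$(java_home_from_bin "$java_bin")" >> "$HADOOP_HOME/etc/hadoop/hadoop-env.sh"
```

Setting the variable in hadoop-env.sh as well as in the profile matters because the daemons started over ssh do not necessarily read the interactive profile.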

Edit $HADOOP_HOME/etc/hadoop/core-site.xml file

<configuration>

<property>

<name>fs.defaultFS</name>

<value>hdfs://linuxsource.delphix.local:9000</value>

</property>

</configuration>

Edit $HADOOP_HOME/etc/hadoop/hdfs-site.xml file

<configuration>

<property>

<name>dfs.replication</name>

<value>1</value>

</property>

<property>

<name>dfs.name.dir</name>

<value>file:///u02/hadoop/hadoopdata/hdfs/namenode</value>

</property>

<property>

<name>dfs.data.dir</name>

<value>file:///u02/hadoop/hadoopdata/hdfs/datanode</value>

</property>

</configuration>

Create the NameNode and DataNode directories that your HADOOP installation will use

mkdir -p /u02/hadoop/hadoopdata/hdfs/{namenode,datanode}

Modify $HADOOP_HOME/etc/hadoop/mapred-site.xml file

<configuration>

<property>

<name>yarn.app.mapreduce.am.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

<property>

<name>mapreduce.map.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

<property>

<name>mapreduce.reduce.env</name>

<value>HADOOP_MAPRED_HOME=$HADOOP_HOME</value>

</property>

</configuration>

Edit $HADOOP_HOME/etc/hadoop/yarn-site.xml file

<configuration>

<property>

<name>yarn.nodemanager.aux-services</name>

<value>mapreduce_shuffle</value>

</property>

</configuration>
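Before formatting the NameNode, it can be useful to confirm that Hadoop actually picks up the values written to the XML files. The sketch below relies on the `hdfs getconf -confKey` command. `check_conf` and the `HDFS_BIN` override are hypothetical names introduced here for illustration.

```shell
# HDFS_BIN can be overridden (for example in tests); defaults to hdfs on PATH.
HDFS_BIN=${HDFS_BIN:-hdfs}

# Hypothetical helper: compare the effective value of a key with the expected one.
# Usage: check_conf <key> <expected-value>
check_conf() {
    key=$1
    want=$2
    # Log warnings go to stderr, so only the value itself is captured
    got=$("$HDFS_BIN" getconf -confKey "$key" 2>/dev/null)
    if [ "$got" = "$want" ]; then
        echo "ok   $key=$got"
    else
        echo "FAIL $key: expected '$want', got '$got'"
        return 1
    fi
}

# Example, using the values from the files above:
# check_conf fs.defaultFS hdfs://linuxsource.delphix.local:9000
# check_conf dfs.replication 1
```

A mismatch here usually points to a typo in a property name or to a file edited under a different HADOOP_HOME.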

Format the NameNode

$ hdfs namenode -format

...

2020-08-17 09:58:23,066 INFO common.Storage: Storage directory /u02/hadoop/hadoopdata/hdfs/namenode has been successfully formatted.

2020-08-17 09:58:23,175 INFO namenode.FSImageFormatProtobuf: Saving image file /u02/hadoop/hadoopdata/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 using no compression

2020-08-17 09:58:23,348 INFO namenode.FSImageFormatProtobuf: Image file /u02/hadoop/hadoopdata/hdfs/namenode/current/fsimage.ckpt_0000000000000000000 of size 402 bytes saved in 0 seconds .

2020-08-17 09:58:23,362 INFO namenode.NNStorageRetentionManager: Going to retain 1 images with txid >= 0

2020-08-17 09:58:23,373 INFO namenode.FSImage: FSImageSaver clean checkpoint: txid=0 when meet shutdown.

2020-08-17 09:58:23,373 INFO namenode.NameNode: SHUTDOWN_MSG:

/************************************************************

SHUTDOWN_MSG: Shutting down NameNode at linuxsource/192.168.247.133

************************************************************/

Start the HDFS daemons, then the ResourceManager and NodeManager daemons

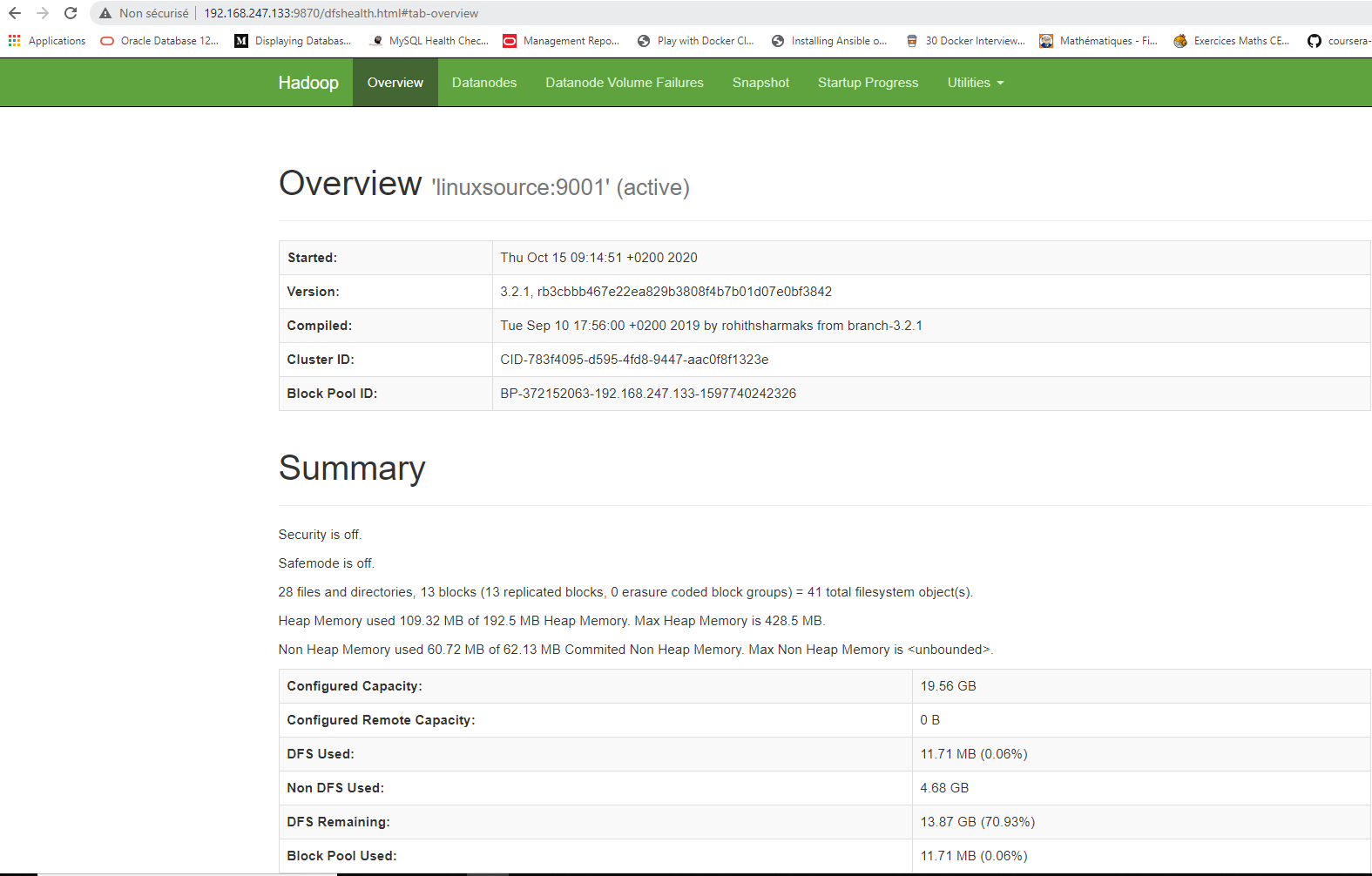

$HADOOP_HOME/sbin/start-dfs.sh

$HADOOP_HOME/sbin/start-yarn.sh

Let's browse the NameNode from a web browser: connect to http://<yourhost>:9870
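Alongside the web UI, the JDK's `jps` tool gives a quick view of which daemons came up after the start scripts have run. The sketch below assumes the usual single-node set of processes (NameNode, DataNode, SecondaryNameNode, ResourceManager, NodeManager). `missing_daemons` is a hypothetical helper that reads `jps` output on standard input.

```shell
# Hypothetical helper: read `jps` output on stdin and print every expected
# daemon that is not listed. Returns non-zero if any daemon is missing.
missing_daemons() {
    listing=$(cat)
    rc=0
    for d in NameNode DataNode SecondaryNameNode ResourceManager NodeManager; do
        # jps prints "<pid> <MainClass>"; compare the class name exactly so
        # that SecondaryNameNode is not mistaken for NameNode
        if ! printf '%s\n' "$listing" | awk -v d="$d" '$2 == d {found=1} END {exit !found}'; then
            echo "missing: $d"
            rc=1
        fi
    done
    return $rc
}

# Example:
# jps | missing_daemons && echo "all daemons are up"
```

When a daemon is reported missing, its log file under $HADOOP_HOME/logs is the first place to look.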

Before uploading a file to test our cluster, let us first create a directory in HDFS.

[delphix@linuxsource ~]$ hdfs dfs -mkdir /msa

2020-08-17 10:58:44,648 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Try uploading a file into msa HDFS directory and check the content.

[delphix@linuxsource ~]$ hdfs dfs -put ~/.bashrc /msa

2020-08-17 10:58:59,552 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-08-17 10:59:01,340 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

[delphix@linuxsource ~]$

[delphix@linuxsource ~]$ hadoop fs -ls /

2020-08-17 11:02:35,611 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 1 items

drwxr-xr-x - delphix supergroup 0 2020-08-17 10:59 /msa

[delphix@linuxsource ~]$ hadoop fs -ls /msa

2020-08-17 11:02:41,961 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 1 items

-rw-r--r-- 1 delphix supergroup 952 2020-08-17 10:59 /msa/.bashrc

[delphix@linuxsource ~]$

[delphix@linuxsource ~]$ hadoop fs -ls hdfs://192.168.247.133:9001/

2020-08-17 11:03:48,023 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 1 items

drwxr-xr-x - delphix supergroup 0 2020-08-17 10:59 hdfs://192.168.247.133:9001/msa

[delphix@linuxsource ~]$
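Listing the directory shows that the file exists, but not that its content survived the trip. A stricter check is to download the file again and compare it byte for byte with the original. This is a minimal sketch: `hdfs_roundtrip` and the `HDFS_BIN` override are hypothetical names, and the helper uses only the `-mkdir -p`, `-put -f` and `-get` options of `hdfs dfs`.

```shell
# HDFS_BIN can be overridden (for example in tests); defaults to hdfs on PATH.
HDFS_BIN=${HDFS_BIN:-hdfs}

# Hypothetical helper: upload a local file, fetch it back, compare the bytes.
# Usage: hdfs_roundtrip <local-file> <hdfs-dir>
hdfs_roundtrip() {
    src=$1
    dir=$2
    name=$(basename "$src")
    back=$(mktemp -d) || return 1
    # Each step runs only if the previous one succeeded
    "$HDFS_BIN" dfs -mkdir -p "$dir" &&
    "$HDFS_BIN" dfs -put -f "$src" "$dir/" &&
    "$HDFS_BIN" dfs -get "$dir/$name" "$back/$name" &&
    cmp -s "$src" "$back/$name"
    rc=$?
    rm -rf "$back"
    if [ "$rc" -eq 0 ]; then
        echo "round trip OK: $dir/$name"
    else
        echo "round trip FAILED: $dir/$name"
    fi
    return $rc
}

# Example:
# hdfs_roundtrip ~/.bashrc /msa
```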

Great, I will now run some MapReduce jobs. Some examples are available as a jar (hadoop-mapreduce-examples.jar) under your $HADOOP_HOME.

Compute the value of Pi and run wordcount

[delphix@linuxsource logs]$ hadoop jar /u02/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar pi 2 5

Number of Maps = 2

Samples per Map = 5

2020-08-18 05:27:10,741 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-08-18 05:27:11,596 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

Wrote input for Map #0

2020-08-18 05:27:11,739 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

Wrote input for Map #1

Starting Job

2020-08-18 05:27:11,839 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

2020-08-18 05:27:12,204 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/delphix/.staging/job_1597742243565_0003

2020-08-18 05:27:12,255 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-08-18 05:27:12,339 INFO input.FileInputFormat: Total input files to process : 2

2020-08-18 05:27:12,358 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-08-18 05:27:12,384 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-08-18 05:27:12,392 INFO mapreduce.JobSubmitter: number of splits:2

2020-08-18 05:27:12,527 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-08-18 05:27:12,551 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1597742243565_0003

2020-08-18 05:27:12,551 INFO mapreduce.JobSubmitter: Executing with tokens: []

2020-08-18 05:27:12,755 INFO conf.Configuration: resource-types.xml not found

2020-08-18 05:27:12,756 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2020-08-18 05:27:12,834 INFO impl.YarnClientImpl: Submitted application application_1597742243565_0003

2020-08-18 05:27:12,876 INFO mapreduce.Job: The url to track the job: http://linuxsource:8088/proxy/application_1597742243565_0003/

2020-08-18 05:27:12,876 INFO mapreduce.Job: Running job: job_1597742243565_0003

2020-08-18 05:27:20,042 INFO mapreduce.Job: Job job_1597742243565_0003 running in uber mode : false

2020-08-18 05:27:20,045 INFO mapreduce.Job: map 0% reduce 0%

2020-08-18 05:27:27,155 INFO mapreduce.Job: map 100% reduce 0%

2020-08-18 05:27:32,189 INFO mapreduce.Job: map 100% reduce 100%

2020-08-18 05:27:32,216 INFO mapreduce.Job: Job job_1597742243565_0003 completed successfully

2020-08-18 05:27:32,345 INFO mapreduce.Job: Counters: 54

File System Counters

FILE: Number of bytes read=50

FILE: Number of bytes written=679350

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=544

HDFS: Number of bytes written=215

HDFS: Number of read operations=13

HDFS: Number of large read operations=0

HDFS: Number of write operations=3

HDFS: Number of bytes read erasure-coded=0

Job Counters

Launched map tasks=2

Launched reduce tasks=1

Data-local map tasks=2

Total time spent by all maps in occupied slots (ms)=8358

Total time spent by all reduces in occupied slots (ms)=2530

Total time spent by all map tasks (ms)=8358

Total time spent by all reduce tasks (ms)=2530

Total vcore-milliseconds taken by all map tasks=8358

Total vcore-milliseconds taken by all reduce tasks=2530

Total megabyte-milliseconds taken by all map tasks=8558592

Total megabyte-milliseconds taken by all reduce tasks=2590720

Map-Reduce Framework

Map input records=2

Map output records=4

Map output bytes=36

Map output materialized bytes=56

Input split bytes=308

Combine input records=0

Combine output records=0

Reduce input groups=2

Reduce shuffle bytes=56

Reduce input records=4

Reduce output records=0

Spilled Records=8

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=234

CPU time spent (ms)=1500

Physical memory (bytes) snapshot=819863552

Virtual memory (bytes) snapshot=8361353216

Total committed heap usage (bytes)=622329856

Peak Map Physical memory (bytes)=310808576

Peak Map Virtual memory (bytes)=2787803136

Peak Reduce Physical memory (bytes)=203501568

Peak Reduce Virtual memory (bytes)=2787303424

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=236

File Output Format Counters

Bytes Written=97

Job Finished in 20.601 seconds

2020-08-18 05:27:32,422 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

Estimated value of Pi is 3.60000000000000000000

[delphix@linuxsource logs]$
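The estimate of 3.6 looks far from Pi, but it is what such a small sample allows: 2 maps with 5 samples each give only 10 points. Assuming the example estimates Pi as 4 × (points inside the circle) / (total points), a result of 3.6 corresponds to 9 of the 10 points falling inside. The arithmetic can be reproduced with a hypothetical `pi_estimate` helper:

```shell
# Hypothetical helper: 4 * inside / total, printed with four decimals.
# Usage: pi_estimate <points-inside> <total-points>
pi_estimate() {
    awk -v inside="$1" -v total="$2" 'BEGIN { printf "%.4f\n", 4 * inside / total }'
}

pi_estimate 9 10   # the run above: 2 maps x 5 samples = 10 points
```

With only 10 points the estimate can move only in steps of 0.4, so passing larger arguments to the `pi` example (more maps, more samples per map) should bring the result much closer to 3.14159.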

hadoop fs -mkdir -p /user/delphix/books

hadoop fs -put /tmp/pg5000.txt /user/delphix/books

hadoop fs -put /tmp/pg1661.txt /user/delphix/books

hadoop fs -put /tmp/pg135.txt /user/delphix/books

[delphix@linuxsource logs]$ hadoop fs -ls /user/delphix/books

2020-08-18 05:20:23,814 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 3 items

-rw-r--r-- 1 delphix supergroup 3322651 2020-08-18 05:20 /user/delphix/books/pg135.txt

-rw-r--r-- 1 delphix supergroup 594933 2020-08-18 05: /user/delphix/books/pg1661.txt

-rw-r--r-- 1 delphix supergroup 1423803 2020-08-18 05:19 /user/delphix/books/pg5000.txt

[delphix@linuxsource logs]$

[delphix@linuxsource logs]$ hadoop jar /u02/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar wordcount books output

2020-08-18 05:23:36,894 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-08-18 05:23:37,676 INFO client.RMProxy: Connecting to ResourceManager at /0.0.0.0:8032

2020-08-18 05:23:38,468 INFO mapreduce.JobResourceUploader: Disabling Erasure Coding for path: /tmp/hadoop-yarn/staging/delphix/.staging/job_1597742243565_0002

2020-08-18 05:23:38,574 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-08-18 05:23:38,720 INFO input.FileInputFormat: Total input files to process : 3

2020-08-18 05:23:38,758 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-08-18 05:23:39,189 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-08-18 05:23:39,607 INFO mapreduce.JobSubmitter: number of splits:3

2020-08-18 05:23:39,763 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

2020-08-18 05:23:39,811 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1597742243565_0002

2020-08-18 05:23:39,811 INFO mapreduce.JobSubmitter: Executing with tokens: []

2020-08-18 05:23:40,197 INFO conf.Configuration: resource-types.xml not found

2020-08-18 05:23:40,198 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2020-08-18 05:23:40,601 INFO impl.YarnClientImpl: Submitted application application_1597742243565_0002

2020-08-18 05:23:40,856 INFO mapreduce.Job: The url to track the job: http://linuxsource:8088/proxy/application_1597742243565_0002/

2020-08-18 05:23:40,857 INFO mapreduce.Job: Running job: job_1597742243565_0002

2020-08-18 05:23:52,081 INFO mapreduce.Job: Job job_1597742243565_0002 running in uber mode : false

2020-08-18 05:23:52,083 INFO mapreduce.Job: map 0% reduce 0%

2020-08-18 05:24:06,288 INFO mapreduce.Job: map 33% reduce 0%

2020-08-18 05:24:08,301 INFO mapreduce.Job: map 100% reduce 0%

2020-08-18 05:24:12,323 INFO mapreduce.Job: map 100% reduce 100%

2020-08-18 05:24:13,341 INFO mapreduce.Job: Job job_1597742243565_0002 completed successfully

2020-08-18 05:24:13,447 INFO mapreduce.Job: Counters: 55

File System Counters

FILE: Number of bytes read=1490561

FILE: Number of bytes written=3885503

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

HDFS: Number of bytes read=5341752

HDFS: Number of bytes written=886472

HDFS: Number of read operations=14

HDFS: Number of large read operations=0

HDFS: Number of write operations=2

HDFS: Number of bytes read erasure-coded=0

Job Counters

Killed map tasks=1

Launched map tasks=4

Launched reduce tasks=1

Data-local map tasks=4

Total time spent by all maps in occupied slots (ms)=39885

Total time spent by all reduces in occupied slots (ms)=3043

Total time spent by all map tasks (ms)=39885

Total time spent by all reduce tasks (ms)=3043

Total vcore-milliseconds taken by all map tasks=39885

Total vcore-milliseconds taken by all reduce tasks=3043

Total megabyte-milliseconds taken by all map tasks=40842240

Total megabyte-milliseconds taken by all reduce tasks=3116032

Map-Reduce Framework

Map input records=113284

Map output records=927416

Map output bytes=8898193

Map output materialized bytes=1490573

Input split bytes=365

Combine input records=927416

Combine output records=101835

Reduce input groups=80709

Reduce shuffle bytes=1490573

Reduce input records=101835

Reduce output records=80709

Spilled Records=203670

Shuffled Maps =3

Failed Shuffles=0

Merged Map outputs=3

GC time elapsed (ms)=1335

CPU time spent (ms)=10640

Physical memory (bytes) snapshot=1168629760

Virtual memory (bytes) snapshot=11191828480

Total committed heap usage (bytes)=907542528

Peak Map Physical memory (bytes)=323743744

Peak Map Virtual memory (bytes)=2801008640

Peak Reduce Physical memory (bytes)=203014144

Peak Reduce Virtual memory (bytes)=2792550400

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=5341387

File Output Format Counters

Bytes Written=886472

[delphix@linuxsource logs]$

[delphix@linuxsource ~]$ hadoop fs -ls /user/delphix/output

2020-10-15 04:16:11,111 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 2 items

-rw-r--r-- 1 delphix supergroup 0 2020-08-18 05:24 /user/delphix/output/_SUCCESS

-rw-r--r-- 1 delphix supergroup 886472 2020-08-18 05:24 /user/delphix/output/part-r-00000

[delphix@linuxsource ~]$ hadoop fs -cat /user/delphix/output/part-r-00000

2020-10-15 04:20:57,285 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-10-15 04:20:58,376 INFO sasl.SaslDataTransferClient: SASL encryption trust check: localHostTrusted = false, remoteHostTrusted = false

" 27

"'A 1

"'About 1

"'Absolute 1

"'Ah!' 2

"'Ah, 2

"'Ample.' 1

"'And 10

"'Arthur!' 1

"'As 1

"'At 1

"'Because 1

"'Breckinridge, 1

"'But 1

"'But, 1

"'But,' 1

"'Certainly 2

"'Certainly,' 1

"'Come! 1

"'Come, 1

"'DEAR 1

"'Dear 2

"'Dearest 1

"'Death,' 1

"'December 1

"'Do 3

"'Don't 1

"'Entirely.' 1

"'Flock'; 1

"'For 1

"'Fritz! 1

"'From 1

"'Gone 1

"'Hampshire. 1

"'Have 2

"'Here 1

"'How 2

"'I 23

"'If 2

"'In 2

"'Is 3

"'It 7

"'It's 1

"'Jephro,' 1

"'Keep 1

"'Ku 1

"'L'homme 1

"'Look 2

"'Lord 1

"'MY 2

"'Mariage 1

"'May 1

"'Monsieur 2

"'Most 1

"'Mr. 2

"'My 4

"'Never 1

"'Never,' 1

...
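The raw output is sorted by key, which is why it starts with punctuation-heavy tokens. To see the most frequent words instead, the output can be re-sorted by count. This is a sketch, assuming the job wrote its default `word<TAB>count` lines; `top_words` is a hypothetical helper.

```shell
# Hypothetical helper: read wordcount output (word<TAB>count) on stdin and
# print the N most frequent words, highest count first (default N = 10).
top_words() {
    n=${1:-10}
    tab=$(printf '\t')
    # Sort by the count column numerically (descending), then by word so that
    # ties come out in a stable order
    LC_ALL=C sort -t "$tab" -k2,2nr -k1,1 | head -n "$n"
}

# Example:
# hadoop fs -cat /user/delphix/output/part-r-00000 2>/dev/null | top_words 20
```

Note that running the wordcount job a second time fails while the output directory exists; removing it first with `hadoop fs -rm -r output` avoids that.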

Now that my Hadoop cluster is working fine, I will replicate the same installation on my linuxtarget environment.

Note that I could also virtualize HADOOP_HOME, but I will go for a separate installation.

This concludes the first part of the study, so stay tuned for the next part ...
