Before reading this post, I encourage you to take a look at the previous parts:

First part: where I set up my HADOOP test lab (a single Hadoop node).

Second part: where I demonstrated how to link a HADOOP source with Delphix as APPDATA (aka Vfile), leveraging the FUSE library.

This is the last part of the "Delphix meets HADOOP" trilogy, in which I demonstrate how to bring Delphix features (storage footprint reduction, virtual copies, time travel, point-in-time copies, masking ...) to HADOOP.

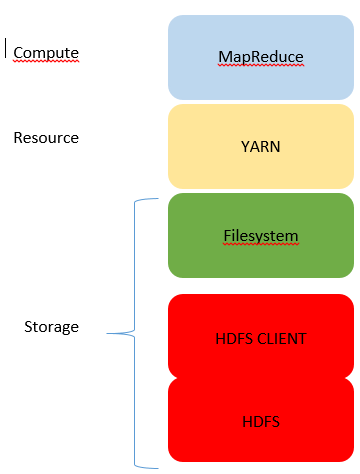

This schema represents the basic architecture of a Hadoop node or set of nodes, where HDFS abstracts the physical storage of all data nodes so that the distributed file system can be manipulated as if it were a single hard drive.

During this part, I will be demonstrating two configuration options:

OPTION I : FULL INTEGRATION

It's called so because HADOOP interacts directly with Delphix NFS disks as backend storage to support all operations defined by the Hadoop FileSystem interface (as represented in the schema below).

For this, I will install a new tool on the target server, the "NetApp Hadoop NFS Connector", freely available on GitHub: https://github.com/NetApp/NetApp-Hadoop-NFS-Connector.

Download the connector from GitHub, and copy the hadoop-nfs-connector-1.0.6.jar file to the HADOOP shared common library directory.

Don't forget to download the other dependencies, if needed, as specified on GitHub.

Create the NFS connector configuration file referenced by the fs.nfs.configuration property in core-site.xml.

Add the delphix user and group JSON configuration files.


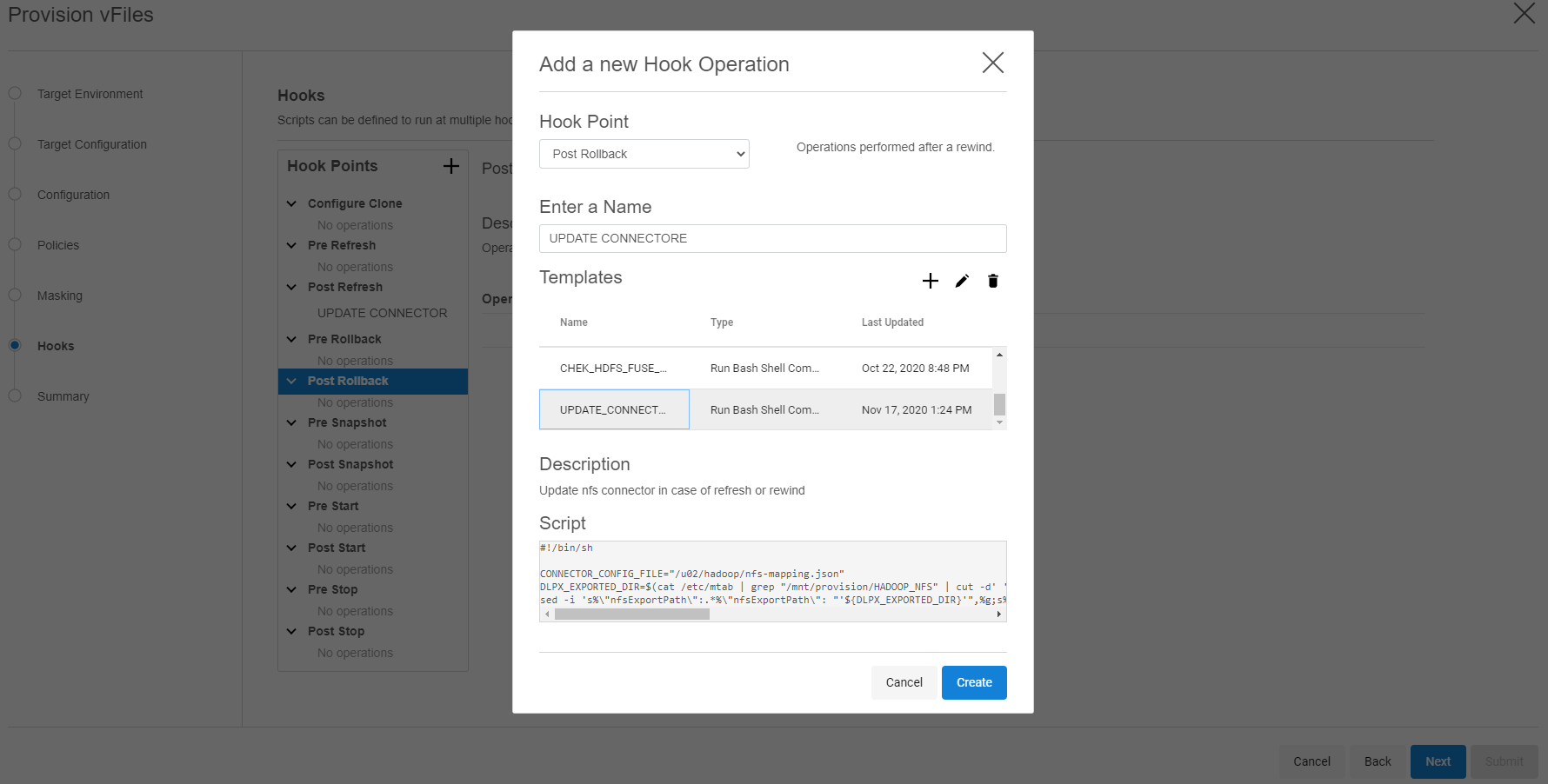


Next, refresh the vfile to sync with production and pick up new changes (notice the new directory inputMapReduce; its content will be used as input for a MapReduce job).

To complete the test, let's mask the username field in the submissions.csv file.
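As a rough illustration of what masking a field means, the Python sketch below replaces every value of a username column with a deterministic pseudonym. It is not the Delphix masking algorithm. The column layout of the sample data, the salt and the helper names (`mask_value`, `mask_column`) are assumptions made for the example, since the real structure of submissions.csv is not shown in this post.

```python
import csv
import hashlib
import io


def mask_value(value: str, salt: str = "demo-salt") -> str:
    """Map a value to a stable pseudonym (same input gives the same output)."""
    digest = hashlib.sha256((salt + value).encode("utf-8")).hexdigest()
    return "user_" + digest[:10]


def mask_column(csv_text: str, column: str) -> str:
    """Return a copy of the CSV text with one column masked."""
    reader = csv.DictReader(io.StringIO(csv_text))
    out = io.StringIO()
    writer = csv.DictWriter(out, fieldnames=reader.fieldnames, lineterminator="\n")
    writer.writeheader()
    for row in reader:
        row[column] = mask_value(row[column])
        writer.writerow(row)
    return out.getvalue()


# Hypothetical sample rows; the real file layout is an assumption.
sample = "id,username,score\n1,alice,10\n2,bob,7\n3,alice,3\n"
masked = mask_column(sample, "username")
print(masked)
```

Because the pseudonym is derived from a hash, the same username always maps to the same masked value, so per-user aggregations still give consistent counts after masking.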

[delphix@linuxtarget hadoop]$ cd share/hadoop/common/lib

[delphix@linuxtarget lib]$ pwd

/u02/hadoop/share/hadoop/common/lib

[delphix@linuxtarget lib]$ ll hadoop-nfs-connector-1.0.6.jar

-rw-r--r-- 1 delphix oinstall 105603 Nov 12 09:31 hadoop-nfs-connector-1.0.6.jar

I will only demonstrate HDFS commands and MapReduce here, even though the connector supports all HADOOP interfaces (YARN, Sqoop, MapReduce, HBase ...).

Edit and update the HADOOP core-site.xml configuration file.

[delphix@linuxtarget hadoop]$ cat core-site.xml

<?xml version="1.0" encoding="UTF-8"?>

<?xml-stylesheet type="text/xsl" href="configuration.xsl"?>

<!--

Licensed under the Apache License, Version 2.0 (the "License");

you may not use this file except in compliance with the License.

You may obtain a copy of the License at

http://www.apache.org/licenses/LICENSE-2.0

Unless required by applicable law or agreed to in writing, software

distributed under the License is distributed on an "AS IS" BASIS,

WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

See the License for the specific language governing permissions and

limitations under the License. See accompanying LICENSE file.

-->

<!-- Put site-specific property overrides in this file. -->

<configuration>

<property>

<!-- NFS defined as primary/default filesystem -->

<name>fs.defaultFS</name>

<!-- Set delphix engine nfs uri -->

<value>nfs://192.168.247.130:2049</value>

</property>

<property>

<name>fs.nfs.configuration</name>

<!-- Define the path of connector config file -->

<value>/u02/hadoop/nfs-mapping.json</value>

</property>

<property>

<!-- Add nfs specific. libs -->

<name>fs.nfs.impl</name>

<value>org.apache.hadoop.fs.nfs.NFSv3FileSystem</value>

</property>

<property>

<name>fs.AbstractFileSystem.nfs.impl</name>

<value>org.apache.hadoop.fs.nfs.NFSv3AbstractFilesystem</value>

</property>

<property>

<name>fs.nfs.prefetch</name>

<value>true</value>

</property>

</configuration>

[delphix@linuxtarget hadoop]$
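Before starting HADOOP, it can help to check that core-site.xml is consistent. The Python sketch below reads the properties and verifies that the scheme used in fs.defaultFS has both implementation classes declared, as in the file above. The helper names (`read_properties`, `check_default_fs`) are mine, and the check only makes sense for a non-built-in scheme such as nfs.

```python
import xml.etree.ElementTree as ET
from urllib.parse import urlparse


def read_properties(xml_text: str) -> dict:
    """Collect <property> name/value pairs from a Hadoop configuration file."""
    root = ET.fromstring(xml_text)
    props = {}
    for prop in root.findall("property"):
        name = prop.findtext("name")
        value = prop.findtext("value")
        if name is not None:
            props[name.strip()] = (value or "").strip()
    return props


def check_default_fs(props: dict) -> list:
    """Return a list of problems found for the default file system scheme."""
    problems = []
    scheme = urlparse(props.get("fs.defaultFS", "")).scheme
    if not scheme:
        problems.append("fs.defaultFS is missing or has no scheme")
        return problems
    for key in (f"fs.{scheme}.impl", f"fs.AbstractFileSystem.{scheme}.impl"):
        if key not in props:
            problems.append(f"{key} is not defined")
    return problems


# Shortened copy of the configuration shown in this post.
SAMPLE = """
<configuration>
  <property><name>fs.defaultFS</name><value>nfs://192.168.247.130:2049</value></property>
  <property><name>fs.nfs.configuration</name><value>/u02/hadoop/nfs-mapping.json</value></property>
  <property><name>fs.nfs.impl</name><value>org.apache.hadoop.fs.nfs.NFSv3FileSystem</value></property>
  <property><name>fs.AbstractFileSystem.nfs.impl</name><value>org.apache.hadoop.fs.nfs.NFSv3AbstractFilesystem</value></property>
</configuration>
"""

props = read_properties(SAMPLE)
problems = check_default_fs(props)
print(problems or "core-site.xml looks consistent")
```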

[delphix@linuxtarget hadoop]$ cat /u02/hadoop/nfs-mapping.json

{

"spaces": [

{

//Configuration name

"name": "delphix_engine",

//Define delphix engine nfs uri

"uri": "nfs://192.168.247.130:2049/",

"options": {

//Define the exported FS for the current version of snapshot from delphix engine

"nfsExportPath": "/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile",

"nfsReadSizeBits": 20,

"nfsWriteSizeBits": 20,

"nfsSplitSizeBits": 28,

"nfsAuthScheme": "AUTH_SYS",

"nfsPort": 2049,

//Define hadoop username, group, id and gid

"nfsUserConfigFile":"/u02/hadoop/nfs-usr.json",

"nfsGroupConfigFile":"/u02/hadoop/nfs-grp.json",

"nfsMountPort": -1,

"nfsRpcbindPort": 111

},

"endpoints": [

{

//Define delphix engine nfs uri

"host": "nfs://192.168.247.130:2049/",

//Define the exported FS for the current version of snapshot from delphix engine

"nfsExportPath": "/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile",

//Target Vfile mount point

"path": "/mnt/provision/HADOOP_NFS"

}

]

}

]

}

[delphix@linuxtarget hadoop]$
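The mapping file mixes JSON with `//` comment lines, so a strict JSON parser rejects it as is. The Python sketch below strips full-line comments before parsing and prints the byte sizes implied by the `...SizeBits` options. Reading those values as powers of two (20 bits giving 1 MiB, 28 bits giving 256 MiB) is my assumption based on the option names, not something confirmed in this post.

```python
import json
import re


def load_commented_json(text: str) -> dict:
    """Parse JSON after dropping lines that contain only a // comment."""
    without_comments = re.sub(r"^[ \t]*//.*$", "", text, flags=re.MULTILINE)
    return json.loads(without_comments)


def size_summary(options: dict) -> dict:
    """Translate the *SizeBits options into byte counts (assumed to be 2**bits)."""
    keys = ("nfsReadSizeBits", "nfsWriteSizeBits", "nfsSplitSizeBits")
    return {key: 1 << options[key] for key in keys if key in options}


# Shortened copy of the mapping file shown above.
SAMPLE = """
{
  "spaces": [
    {
      //Configuration name
      "name": "delphix_engine",
      "uri": "nfs://192.168.247.130:2049/",
      "options": {
        //Exported FS for the current snapshot
        "nfsExportPath": "/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile",
        "nfsReadSizeBits": 20,
        "nfsWriteSizeBits": 20,
        "nfsSplitSizeBits": 28
      }
    }
  ]
}
"""

config = load_commented_json(SAMPLE)
options = config["spaces"][0]["options"]
sizes = size_summary(options)
print(options["nfsExportPath"])
print(sizes)
```

Only lines that start with `//` are removed, so the `nfs://` URIs inside string values are left untouched.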

[delphix@linuxtarget hadoop]$ cat /u02/hadoop/nfs-usr.json

{

"usernames":[

{

"userName":"delphix",

"userID":"501"

}

]

}

[delphix@linuxtarget hadoop]$ cat "/u02/hadoop/nfs-grp.json"

{

"groupnames":[

{

"groupName":"oinstall",

"groupID":"500"

}

]

}

[delphix@linuxtarget hadoop]$
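The IDs in these two files have to match the OS account that owns the data on the NFS export. As a small sketch, the Python snippet below derives both JSON documents from the output of the `id` command. The sample `id` line is made up to match the values used in this post, and the helper name `mappings_from_id` is an assumption.

```python
import json
import re

# Matches the leading "uid=501(delphix) gid=500(oinstall)" part of `id` output.
ID_PATTERN = re.compile(r"uid=(\d+)\(([^)]+)\)\s+gid=(\d+)\(([^)]+)\)")


def mappings_from_id(id_output: str):
    """Build the user and group mapping documents from `id` output."""
    match = ID_PATTERN.search(id_output)
    if match is None:
        raise ValueError("unrecognised `id` output")
    uid, user, gid, group = match.groups()
    users = {"usernames": [{"userName": user, "userID": uid}]}
    groups = {"groupnames": [{"groupName": group, "groupID": gid}]}
    return users, groups


# Hypothetical `id` output consistent with the files above.
sample = "uid=501(delphix) gid=500(oinstall) groups=500(oinstall)"
users, groups = mappings_from_id(sample)
print(json.dumps(users, indent=2))
print(json.dumps(groups, indent=2))
```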

Start hadoop to make it use NFS as its backend storage.

[delphix@linuxtarget hadoop]$ start-dfs.sh; start-yarn.sh

Starting namenodes on [linuxtarget.delphix.local]

Starting datanodes

Starting secondary namenodes [linuxtarget.delphix.local]

2020-11-18 10:41:40,939 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Starting resourcemanager

Starting nodemanagers

[delphix@linuxtarget hadoop]$ jps

8628 NodeManager

9015 Jps

1818 Bootstrap

8495 ResourceManager

[delphix@linuxtarget hadoop]$

Testing time ...

First, let's create a hook that updates the connector configuration file each time we refresh or rewind the vfile, since Delphix changes the exported directory name.

Add the hook created previously, which updates the connector configuration, to the post-rewind and post-refresh hook options.
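The hook script itself is not shown in this post. As a minimal sketch of the idea, the Python code below looks up the export currently mounted on the vfile mount point and rewrites every `nfsExportPath` value in the connector configuration. The function names, the sample mount line and its mount options are assumptions; a real hook would read /proc/mounts and write /u02/hadoop/nfs-mapping.json back to disk.

```python
import re


def current_export(mounts_text: str, mount_point: str):
    """Return the remote path mounted on mount_point, or None if not found."""
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[1] == mount_point and ":" in fields[0]:
            return fields[0].split(":", 1)[1]
    return None


def rewrite_export(config_text: str, new_export: str) -> str:
    """Replace the value of every "nfsExportPath" key, keeping comments intact."""
    pattern = re.compile(r'("nfsExportPath"\s*:\s*")[^"]*(")')
    return pattern.sub(lambda m: m.group(1) + new_export + m.group(2), config_text)


# Hypothetical mount table line after a rewind (timeflow-136).
MOUNTS = (
    "192.168.247.130:/domain0/group-16/appdata_container-50/"
    "appdata_timeflow-136/datafile /mnt/provision/HADOOP_NFS nfs rw 0 0\n"
)

# Excerpt of the configuration still pointing at the old timeflow-134.
CONFIG = '''
"options": {
  //Define the exported FS for the current version of snapshot
  "nfsExportPath": "/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile"
},
"endpoints": [
  { "nfsExportPath": "/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile" }
]
'''

export = current_export(MOUNTS, "/mnt/provision/HADOOP_NFS")
updated = rewrite_export(CONFIG, export)
print(updated)
```

Working on the raw text rather than parsing the JSON keeps the `//` comments of the original file in place.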

It's time to validate the configuration by running some hadoop commands and mapreduce jobs on the vfile mount point.

[delphix@linuxtarget hadoop]$ tree /mnt/provision/HADOOP_NFS

/mnt/provision/HADOOP_NFS
├── msa
│   ├── pg135.txt
│   ├── pg1661.txt
│   └── pg5000.txt
├── tmp
│   └── hadoop-yarn
│       └── staging
│           ├── delphix
│           └── history
│               └── done_intermediate
│                   └── delphix
│                       ├── job_1597742243565_0002-1597742620305-delphix-word+count-1597742650887-3-1-SUCCEEDED-default-1597742630491.jhist
│                       ├── job_1597742243565_0002_conf.xml
│                       ├── job_1597742243565_0002.summary
│                       ├── job_1597742243565_0003-1597742832787-delphix-QuasiMonteCarlo-1597742850486-2-1-SUCCEEDED-default-1597742838692.jhist
│                       ├── job_1597742243565_0003_conf.xml
│                       └── job_1597742243565_0003.summary
└── user
    └── delphix
        ├── 1777
        │   └── delphix
        │       ├── job_1605608715662_0001-1605608768080-delphix-QuasiMonteCarlo-1605608804125-2-1-SUCCEEDED-default-1605608776146.jhist
        │       ├── job_1605608715662_0001_conf.xml
        │       ├── job_1605608715662_0001.summary
        │       ├── job_1605624127021_0001-1605624167009-delphix-word+count-1605624260869-3-1-SUCCEEDED-default-1605624175112.jhist
        │       ├── job_1605624127021_0001_conf.xml
        │       └── job_1605624127021_0001.summary
        ├── books
        │   ├── pg135.txt
        │   ├── pg1661.txt
        │   └── pg5000.txt
        ├── output
        │   ├── part-r-00000
        │   └── _SUCCESS
        ├── output2
        │   └── _temporary
        │       └── 1
        ├── output3
        │   ├── part-r-00000
        │   └── _SUCCESS
        └── QuasiMonteCarlo_1605608650898_611566920
            └── in
                ├── part0
                └── part1

20 directories, 24 files

[delphix@linuxtarget hadoop]$

[delphix@linuxtarget hadoop]$ /u02/hadoop/bin/hadoop fs -ls /

2020-11-18 11:47:34,970 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-18 11:47:35,460 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-18 11:47:35,460 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

Found 3 items

drwxr-xr-x - delphix oinstall 5 2020-08-18 08:40 /msa

drwx------ - delphix oinstall 3 2020-08-18 05:21 /tmp

drwxr-xr-x - delphix oinstall 3 2020-08-18 05:23 /user

[delphix@linuxtarget hadoop]$ /u02/hadoop/bin/hadoop fs -ls /msa*

2020-11-18 11:49:44,428 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-18 11:49:44,831 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-18 11:49:44,831 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

Found 3 items

-rw-r--r-- 1 delphix oinstall 3322651 2020-08-18 08:40 /msa/pg135.txt

-rw-r--r-- 1 delphix oinstall 594933 2020-08-18 08:40 /msa/pg1661.txt

-rw-r--r-- 1 delphix oinstall 1423803 2020-08-18 08:40 /msa/pg5000.txt

[delphix@linuxtarget hadoop]$ /u02/hadoop/bin/hadoop fs -ls /user/delphix

2020-11-18 11:49:59,200 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-18 11:49:59,751 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-18 11:49:59,751 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

Found 6 items

drwxrwxrwt - delphix oinstall 3 2020-11-17 07:26 /user/delphix/1777

drwxrwxrwx - delphix oinstall 3 2020-11-17 07:24 /user/delphix/QuasiMonteCarlo_1605608650898_611566920

drwxr-xr-x - delphix oinstall 5 2020-08-18 05:23 /user/delphix/books

drwxr-xr-x - delphix oinstall 4 2020-08-18 05:24 /user/delphix/output

drwxrwxrwx - delphix oinstall 3 2020-11-17 07:27 /user/delphix/output2

drwxrwxrwx - delphix oinstall 4 2020-11-17 11:44 /user/delphix/output3

[delphix@linuxtarget hadoop]$ /u02/hadoop/bin/hadoop fs -ls /tmp

2020-11-18 11:50:16,673 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-18 11:50:17,106 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-18 11:50:17,106 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

Found 1 items

drwx------ - delphix oinstall 3 2020-08-18 05:21 /tmp/hadoop-yarn

[delphix@linuxtarget hadoop]$
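When this validation is scripted, the connector's log lines are interleaved with the listing itself. The Python sketch below keeps only the entry lines of a `hadoop fs -ls` output and extracts owner, group and path. The `Entry` type and `parse_ls` function are names I made up for the example, and the sample text is copied from the first listing above.

```python
import re
from typing import List, NamedTuple


class Entry(NamedTuple):
    permissions: str
    owner: str
    group: str
    path: str


# An entry line starts with a 10-character permission string such as drwxr-xr-x.
_LS_LINE = re.compile(r"^[d-][rwxsStT-]{9}\s")


def parse_ls(output: str) -> List[Entry]:
    """Extract listing entries, skipping log lines and the 'Found N items' line."""
    entries = []
    for line in output.splitlines():
        if not _LS_LINE.match(line):
            continue
        # Fields: permissions, replication, owner, group, size, date, time, path
        fields = line.split(None, 7)
        if len(fields) == 8:
            entries.append(Entry(fields[0], fields[2], fields[3], fields[7]))
    return entries


SAMPLE = """2020-11-18 11:47:35,460 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json
Found 3 items
drwxr-xr-x - delphix oinstall 5 2020-08-18 08:40 /msa
drwx------ - delphix oinstall 3 2020-08-18 05:21 /tmp
drwxr-xr-x - delphix oinstall 3 2020-08-18 05:23 /user
"""

entries = parse_ls(SAMPLE)
for entry in entries:
    print(entry.path, entry.owner, entry.group)
```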

Interesting! The output confirms that HADOOP used the vfile (NFS) to list the disk content, which is a virtual copy of our production data.

The question now is: can it also be used to run MapReduce jobs?

[delphix@linuxtarget hadoop]$ /u02/hadoop/bin/hadoop jar /u02/hadoop/share/hadoop/mapreduce/hadoop-mapreduce-examples-3.2.1.jar pi 2 1

Number of Maps = 2

Samples per Map = 1

2020-11-18 12:18:05,614 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-18 12:18:05,988 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-18 12:18:05,988 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

2020-11-18 12:18:06,470 WARN stream.NFSBufferedOutputStream: Flushing a closed stream. Check your code.

2020-11-18 12:18:06,475 INFO stream.NFSBufferedOutputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/user/delphix/QuasiMonteCarlo_1605719885286_1765607225/in/part0

STREAMSTATS streamID: 1

STREAMSTATS ====OutputStream Statistics====

STREAMSTATS BytesWritten: 102

STREAMSTATS writeOps: 5

STREAMSTATS timeWritten: 0.001 s

STREAMSTATS ====NFS Write Statistics====

STREAMSTATS BytesNFSWritten: 212

STREAMSTATS NFSWriteOps: 2

STREAMSTATS timeNFSWritten: 0.008 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Write: 0.097 MB/s

STREAMSTATS NFSWrite: 0.025 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Write: 0.200 ms

STREAMSTATS NFSWrite: 4.000 ms

2020-11-18 12:18:06,478 INFO stream.NFSBufferedOutputStream: OutputStream shutdown took 8 ms

Wrote input for Map #0

2020-11-18 12:18:06,510 WARN stream.NFSBufferedOutputStream: Flushing a closed stream. Check your code.

2020-11-18 12:18:06,518 INFO stream.NFSBufferedOutputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/user/delphix/QuasiMonteCarlo_1605719885286_1765607225/in/part1

STREAMSTATS streamID: 2

STREAMSTATS ====OutputStream Statistics====

STREAMSTATS BytesWritten: 102

STREAMSTATS writeOps: 5

STREAMSTATS timeWritten: 0.0 s

STREAMSTATS ====NFS Write Statistics====

STREAMSTATS BytesNFSWritten: 212

STREAMSTATS NFSWriteOps: 2

STREAMSTATS timeNFSWritten: 0.004 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Write: Infinity MB/s

STREAMSTATS NFSWrite: 0.051 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Write: 0.000 ms

STREAMSTATS NFSWrite: 2.000 ms

2020-11-18 12:18:06,518 INFO stream.NFSBufferedOutputStream: OutputStream shutdown took 8 ms

Wrote input for Map #1

Starting Job

2020-11-18 12:18:06,693 INFO client.RMProxy: Connecting to ResourceManager at /192.168.247.134:8032

2020-11-18 12:18:07,219 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-18 12:18:07,219 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

2020-11-18 12:18:07,654 WARN stream.NFSBufferedOutputStream: Flushing a closed stream. Check your code.

2020-11-18 12:18:07,684 INFO stream.NFSBufferedOutputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/tmp/hadoop-yarn/staging/delphix/.staging/job_1605719636745_0001/job.jar

STREAMSTATS streamID: 3

STREAMSTATS ====OutputStream Statistics====

STREAMSTATS BytesWritten: 316534

STREAMSTATS writeOps: 78

STREAMSTATS timeWritten: 0.0 s

STREAMSTATS ====NFS Write Statistics====

STREAMSTATS BytesNFSWritten: 316534

STREAMSTATS NFSWriteOps: 1

STREAMSTATS timeNFSWritten: 0.027 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Write: Infinity MB/s

STREAMSTATS NFSWrite: 11.180 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Write: 0.000 ms

STREAMSTATS NFSWrite: 27.000 ms

2020-11-18 12:18:07,685 INFO stream.NFSBufferedOutputStream: OutputStream shutdown took 31 ms

2020-11-18 12:18:07,792 INFO input.FileInputFormat: Total input files to process : 2

2020-11-18 12:18:07,832 WARN stream.NFSBufferedOutputStream: Flushing a closed stream. Check your code.

2020-11-18 12:18:07,841 INFO stream.NFSBufferedOutputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/tmp/hadoop-yarn/staging/delphix/.staging/job_1605719636745_0001/job.split

STREAMSTATS streamID: 4

STREAMSTATS ====OutputStream Statistics====

STREAMSTATS BytesWritten: 307

STREAMSTATS writeOps: 9

STREAMSTATS timeWritten: 0.0 s

STREAMSTATS ====NFS Write Statistics====

STREAMSTATS BytesNFSWritten: 315

STREAMSTATS NFSWriteOps: 1

STREAMSTATS timeNFSWritten: 0.002 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Write: Infinity MB/s

STREAMSTATS NFSWrite: 0.150 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Write: 0.000 ms

STREAMSTATS NFSWrite: 2.000 ms

2020-11-18 12:18:07,842 INFO stream.NFSBufferedOutputStream: OutputStream shutdown took 9 ms

2020-11-18 12:18:07,875 WARN stream.NFSBufferedOutputStream: Flushing a closed stream. Check your code.

2020-11-18 12:18:07,878 INFO stream.NFSBufferedOutputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/tmp/hadoop-yarn/staging/delphix/.staging/job_1605719636745_0001/job.splitmetainfo

STREAMSTATS streamID: 5

STREAMSTATS ====OutputStream Statistics====

STREAMSTATS BytesWritten: 38

STREAMSTATS writeOps: 3

STREAMSTATS timeWritten: 0.0 s

STREAMSTATS ====NFS Write Statistics====

STREAMSTATS BytesNFSWritten: 49

STREAMSTATS NFSWriteOps: 1

STREAMSTATS timeNFSWritten: 0.002 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Write: Infinity MB/s

STREAMSTATS NFSWrite: 0.023 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Write: 0.000 ms

STREAMSTATS NFSWrite: 2.000 ms

2020-11-18 12:18:07,879 INFO stream.NFSBufferedOutputStream: OutputStream shutdown took 4 ms

2020-11-18 12:18:07,879 INFO mapreduce.JobSubmitter: number of splits:2

2020-11-18 12:18:08,285 WARN stream.NFSBufferedOutputStream: Flushing a closed stream. Check your code.

2020-11-18 12:18:08,295 INFO stream.NFSBufferedOutputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/tmp/hadoop-yarn/staging/delphix/.staging/job_1605719636745_0001/job.xml

STREAMSTATS streamID: 6

STREAMSTATS ====OutputStream Statistics====

STREAMSTATS BytesWritten: 193869

STREAMSTATS writeOps: 24

STREAMSTATS timeWritten: 0.0 s

STREAMSTATS ====NFS Write Statistics====

STREAMSTATS BytesNFSWritten: 387738

STREAMSTATS NFSWriteOps: 2

STREAMSTATS timeNFSWritten: 0.012 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Write: Infinity MB/s

STREAMSTATS NFSWrite: 30.815 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Write: 0.000 ms

STREAMSTATS NFSWrite: 6.000 ms

2020-11-18 12:18:08,296 INFO stream.NFSBufferedOutputStream: OutputStream shutdown took 11 ms

2020-11-18 12:18:08,296 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1605719636745_0001

2020-11-18 12:18:08,298 INFO mapreduce.JobSubmitter: Executing with tokens: []

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

2020-11-18 12:18:08,820 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-18 12:18:08,820 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

2020-11-18 12:18:08,841 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-18 12:18:08,841 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

2020-11-18 12:18:09,067 INFO conf.Configuration: resource-types.xml not found

2020-11-18 12:18:09,084 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2020-11-18 12:18:09,758 INFO impl.YarnClientImpl: Submitted application application_1605719636745_0001

2020-11-18 12:18:09,857 INFO mapreduce.Job: The url to track the job: http://linuxtarget:8088/proxy/application_1605719636745_0001/

2020-11-18 12:18:09,858 INFO mapreduce.Job: Running job: job_1605719636745_0001

2020-11-18 12:18:23,318 INFO mapreduce.Job: Job job_1605719636745_0001 running in uber mode : false

2020-11-18 12:18:23,318 INFO mapreduce.Job: map 0% reduce 0%

2020-11-18 12:18:31,459 INFO mapreduce.Job: Task Id : attempt_1605719636745_0001_m_000001_0, Status : FAILED

[2020-11-18 12:18:30.079]Exception from container-launch.

Container id: container_1605719636745_0001_01_000003

Exit code: 1

[2020-11-18 12:18:30.126]Container exited with a non-zero exit code 1. Error file: prelaunch.err.

Last 4096 bytes of prelaunch.err :

Last 4096 bytes of stderr :

OpenJDK 64-Bit Server VM warning: INFO: os::commit_memory(0x00000000ef900000, 71303168, 0) failed; error='Cannot allocate memory' (errno=12)

[2020-11-18 12:18:30.127]Container exited with a non-zero exit code 1. Error file: prelaunch.err.

Last 4096 bytes of prelaunch.err :

Last 4096 bytes of stderr :

OpenJDK 64-Bit Server VM warning: INFO: os::commit_memory(0x00000000ef900000, 71303168, 0) failed; error='Cannot allocate memory' (errno=12)

2020-11-18 12:18:32,524 INFO mapreduce.Job: map 50% reduce 0%

2020-11-18 12:19:25,436 INFO mapreduce.Job: map 100% reduce 0%

2020-11-18 12:19:27,456 INFO mapreduce.Job: map 100% reduce 100%

2020-11-18 12:19:27,494 INFO mapreduce.Job: Job job_1605719636745_0001 completed successfully

2020-11-18 12:19:27,729 INFO mapreduce.Job: Counters: 56

File System Counters

FILE: Number of bytes read=50

FILE: Number of bytes written=682197

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

NFS: Number of bytes read=0

NFS: Number of bytes written=215

NFS: Number of read operations=0

NFS: Number of large read operations=0

NFS: Number of write operations=0

Job Counters

Failed map tasks=2

Failed reduce tasks=1

Launched map tasks=4

Launched reduce tasks=2

Other local map tasks=2

Rack-local map tasks=2

Total time spent by all maps in occupied slots (ms)=119102

Total time spent by all reduces in occupied slots (ms)=57284

Total time spent by all map tasks (ms)=59551

Total time spent by all reduce tasks (ms)=28642

Total vcore-milliseconds taken by all map tasks=59551

Total vcore-milliseconds taken by all reduce tasks=28642

Total megabyte-milliseconds taken by all map tasks=121960448

Total megabyte-milliseconds taken by all reduce tasks=58658816

Map-Reduce Framework

Map input records=2

Map output records=4

Map output bytes=36

Map output materialized bytes=56

Input split bytes=308

Combine input records=0

Combine output records=0

Reduce input groups=2

Reduce shuffle bytes=56

Reduce input records=4

Reduce output records=0

Spilled Records=8

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=241

CPU time spent (ms)=2800

Physical memory (bytes) snapshot=636350464

Virtual memory (bytes) snapshot=9067249664

Total committed heap usage (bytes)=513277952

Peak Map Physical memory (bytes)=255586304

Peak Map Virtual memory (bytes)=3020283904

Peak Reduce Physical memory (bytes)=145166336

Peak Reduce Virtual memory (bytes)=3027767296

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=97

Job Finished in 81.214 seconds

2020-11-18 12:19:27,802 INFO stream.NFSBufferedInputStream: Changing prefetchBlockLimit to 0

2020-11-18 12:19:27,829 INFO stream.NFSBufferedInputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/user/delphix/QuasiMonteCarlo_1605719885286_1765607225/out/reduce-out

STREAMSTATS streamID: 1

STREAMSTATS ====InputStream Statistics====

STREAMSTATS BytesRead: 118

STREAMSTATS readOps: 1

STREAMSTATS timeRead: 0.024 s

STREAMSTATS ====NFS Read Statistics====

STREAMSTATS BytesNFSRead: 118

STREAMSTATS NFSReadOps: 1

STREAMSTATS timeNFSRead: 0.01 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Read: 0.005 MB/s

STREAMSTATS NFSRead: 0.011 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Read: 24.000 ms

STREAMSTATS NFSRead: 10.000 ms

Estimated value of Pi is 4.00000000000000000000

[delphix@linuxtarget hadoop]$
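An estimate of exactly 4 is expected with only 2 maps and 1 sample per map: the estimate is 4 times the fraction of sample points that fall inside a circle, and with two points that both fall inside, the fraction is 1. The Python sketch below reproduces this with a Halton-style sequence in bases 2 and 3. It reflects my understanding of how the QuasiMonteCarlo example works and is not the Hadoop source code.

```python
import math


def radical_inverse(index: int, base: int) -> float:
    """Mirror the base-`base` digits of index around the decimal point."""
    result = 0.0
    fraction = 1.0 / base
    while index > 0:
        result += fraction * (index % base)
        index //= base
        fraction /= base
    return result


def estimate_pi(samples: int) -> float:
    """Estimate pi as 4 * (points inside the inscribed circle) / (all points)."""
    inside = 0
    for i in range(1, samples + 1):
        # Point in the unit square, shifted so the circle is centred at the origin.
        x = radical_inverse(i, 2) - 0.5
        y = radical_inverse(i, 3) - 0.5
        if x * x + y * y <= 0.25:
            inside += 1
    return 4.0 * inside / samples


print(estimate_pi(2))       # two samples, as in "pi 2 1"
print(estimate_pi(20000))   # many more samples give a value close to pi
```

The job here was only meant to prove that MapReduce runs on the NFS backend, so the tiny sample count is fine; a larger number of maps and samples would be needed for a meaningful estimate.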

[delphix@linuxtarget hadoop]$ /u02/hadoop/bin/hadoop fs -ls /user/delphix/output3

2020-11-17 09:50:47,935 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-17 09:50:48,303 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-17 09:50:48,304 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

Found 2 items

-rw-r--r-- 1 delphix oinstall 0 2020-11-17 11:44 /user/delphix/output3/_SUCCESS

-rw-r--r-- 1 delphix oinstall 886472 2020-11-17 11:44 /user/delphix/output3/part-r-00000

[delphix@linuxtarget hadoop]$ /u02/hadoop/bin/hadoop fs -cat /user/delphix/output3/part-r-00000

2020-11-17 09:51:27,747 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-17 09:51:28,058 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-17 09:51:28,058 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

2020-11-17 09:51:28,258 INFO stream.NFSBufferedInputStream: Changing prefetchBlockLimit to 0

" 27

"'A 1

"'About 1

"'Absolute 1

"'Ah!' 2

"'Ah, 2

"'Ample.' 1

"'And 10

"'Arthur!' 1

"'As 1

"'At 1

"'Because 1

"'Breckinridge, 1

"'But 1

"'But, 1

"'But,' 1

"'Certainly 2

"'Certainly,' 1

"'Come! 1

"'Come, 1

"'DEAR 1

"'Dear 2

"'Dearest 1

"'Death,' 1

"'December 1

1

"Yonder, 1

"You 340

"You!" 1

"You'll 5

"You're 9

"You've 2

"You, 2

"You? 1

"You?" 5

"Young 3

"Your 40

Armenia_ 1

Armenian 3

Armenians 1

Armitage, 1

Armitage--Percy 1

Armitage--the 1

Armour 1

Arms 2

Armuyr. 1

Army 3

Army. 1

Arnaldo)_"_, 1

Arnauld 1

Arnault 1

Arnay-Le-Duc, 1

Arno 28

Arno, 3

Arno. 2

Arno.] 1

Arno; 1

Arnoul 1

Arnould 1

Arnsworth 1

Arona 1

Arostogeiton 1

Arouet 1

Around 3

Arras 11

Arras, 7

Arras. 8

Arras." 3

Arras; 4

Arras? 2

Arras?" 2

Arrigo 3

Arrigucci,-- 1

Arrival 2

Arrive 1

Arrived 1

Arsenal 4

Arsenal, 3

Arsenal. 1

Arsenal." 1

Arsenal; 1

Art 5

Art, 1

Artaxerxes' 1

Arte 1

Artevelde 1

Artevelde, 1

Arthamis, 1

Arthur 13

Arthur's 2

Arthur's. 1

Arthur, 4

Arthur. 2

Arthurs 1

Arthurs, 1

Arti. 2

Article 2

Artillery-men 2

Artillery. 1

Artist, 1

Artistic 1

Artists) 1

Artists. 1

Artonge 1

Arts 1

Arts, 2

Arts.' 1

Arts_ 2

Arts_, 2

Arve, 1

As 467

...

As, 2

Ascend-with-Regret,"[36] 1

Ascension 2

Ascension, 1

Ascoli 2

Ash 3

Ash-Wednesday; 1

Ash. 3

Ashamed 1

Ashburnham 2

Ashburnham, 2

Asia 21

Asia. 1

Asia?' 1

Asiatic 3

| 36

àpieza; 1

è 4

è: 1

ècrit_ 1

2020-11-17 09:55:44,542 INFO stream.NFSBufferedInputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/user/delphix/output3/part-r-00000

STREAMSTATS streamID: 1

STREAMSTATS ====InputStream Statistics====

STREAMSTATS BytesRead: 886472

STREAMSTATS readOps: 217

STREAMSTATS timeRead: 0.021 s

STREAMSTATS ====NFS Read Statistics====

STREAMSTATS BytesNFSRead: 886472

STREAMSTATS NFSReadOps: 1

STREAMSTATS timeNFSRead: 0.015 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Read: 40.257 MB/s

STREAMSTATS NFSRead: 56.360 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Read: 0.097 ms

STREAMSTATS NFSRead: 15.000 ms

Awesome, the MapReduce job completed successfully using the (NFS) virtual copy and this directory.
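The STREAMSTATS blocks in the logs above can be checked by hand. Judging from the numbers, bandwidth is bytes divided by seconds divided by 2^20, average latency is time divided by the number of operations, and "Infinity MB/s" appears when the measured time is 0.0 s. The Python sketch below redoes that arithmetic. The formulas are inferred from the log values, not taken from the connector's source code.

```python
import math

MIB = 1 << 20  # the logged MB/s values match a 2**20-byte megabyte


def bandwidth_mb_s(byte_count: int, seconds: float) -> float:
    """Bytes per second expressed in MB/s; infinite when no time was measured."""
    if seconds == 0:
        return math.inf
    return byte_count / seconds / MIB


def avg_latency_ms(seconds: float, ops: int) -> float:
    """Average time per operation in milliseconds."""
    return seconds * 1000.0 / ops if ops else 0.0


# Values copied from the job.jar stream (streamID 3) in the log above.
print(round(bandwidth_mb_s(316534, 0.027), 3))   # logged as NFSWrite: 11.180 MB/s
print(bandwidth_mb_s(316534, 0.0))               # logged as Write: Infinity MB/s
print(avg_latency_ms(0.027, 1))                  # logged as NFSWrite: 27.000 ms
```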

Let's simulate a destructive test to experience the time travel capability (notice the timeflow version directory, appdata_timeflow-134, in the mount path).

[delphix@linuxtarget ~]$ df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_linuxsource-lv_root

19G 16G 2.9G 85% /

tmpfs 2.0G 0 2.0G 0% /dev/shm

/dev/sda1 477M 110M 342M 25% /boot

/dev/sdb 30G 21G 7.9G 72% /u01

/dev/sdc 20G 7.7G 11G 41% /u02

192.168.247.130:/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile

25G 6.0M 25G 1% /mnt/provision/HADOOP_NFS

[delphix@linuxtarget ~]$

[delphix@linuxtarget ~]$ /u02/hadoop/bin/hadoop fs -ls /

2020-11-19 10:29:25,234 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-19 10:29:25,539 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-19 10:29:25,539 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

Found 3 items

drwxr-xr-x - delphix oinstall 5 2020-08-18 08:40 /msa

drwx------ - delphix oinstall 3 2020-08-18 05:21 /tmp

drwxr-xr-x - delphix oinstall 3 2020-08-18 05:23 /user

[delphix@linuxtarget ~]$ /u02/hadoop/bin/hadoop fs -rm -r /msa

2020-11-19 10:29:34,585 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-19 10:29:34,873 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-19 10:29:34,873 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

2020-11-19 10:29:35,084 INFO Configuration.deprecation: io.bytes.per.checksum is deprecated. Instead, use dfs.bytes-per-checksum

Deleted /msa

[delphix@linuxtarget ~]$ /u02/hadoop/bin/hadoop fs -ls /

2020-11-19 10:29:40,235 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-19 10:29:40,570 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-19 10:29:40,570 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-134/datafile path=/ has fsId 6383044330812034134

Found 2 items

drwx------ - delphix oinstall 3 2020-08-18 05:21 /tmp

drwxr-xr-x - delphix oinstall 3 2020-08-18 05:23 /user

Rewind the vfile to its previous state.

Notice that the timeflow directory has changed (appdata_timeflow-134 became appdata_timeflow-136) and that our "msa" directory is back. Behind the scenes, the hook updated the connector configuration file to make it point to the right (active) snapshot version on the Delphix engine.

[delphix@linuxtarget ~]$ df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_linuxsource-lv_root

19G 16G 2.9G 85% /

tmpfs 2.0G 0 2.0G 0% /dev/shm

/dev/sda1 477M 110M 342M 25% /boot

/dev/sdb 30G 21G 7.9G 72% /u01

/dev/sdc 20G 7.7G 11G 41% /u02

192.168.247.130:/domain0/group-16/appdata_container-50/appdata_timeflow-136/datafile

25G 7.0M 25G 1% /mnt/provision/HADOOP_NFS

[delphix@linuxtarget ~]$ /u02/hadoop/bin/hadoop fs -ls /

2020-11-19 10:40:23,505 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-19 10:40:24,017 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-19 10:40:24,017 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-136/datafile path=/ has fsId -6212462795412190200

Found 3 items

drwxr-xr-x - delphix oinstall 5 2020-08-18 08:40 /msa

drwx------ - delphix oinstall 3 2020-08-18 05:21 /tmp

drwxr-xr-x - delphix oinstall 3 2020-08-18 05:23 /user

[delphix@linuxtarget ~]$
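The hook's job, as described above, is to find the export currently mounted at the vfile mount point and write it into the connector configuration. The hook used in this lab is not listed in the post, so here is only a minimal Python sketch of that logic. The function names, the `/proc/mounts`-style input and the `spaces` / `endpoints` / `exportPath` JSON keys are assumptions to check against your own connector configuration file.

```python
import json


def find_export(mounts_text, mount_point):
    """Return (server, export_path) for the NFS share mounted at mount_point.

    mounts_text is expected to look like /proc/mounts: one mount per line,
    "server:/export" as the first field and the mount point as the second.
    """
    for line in mounts_text.splitlines():
        fields = line.split()
        if len(fields) >= 2 and fields[1] == mount_point and ":" in fields[0]:
            server, export_path = fields[0].split(":", 1)
            return server, export_path
    raise LookupError("no NFS mount found at " + mount_point)


def update_export(config, export_path):
    """Set the export path on every endpoint of a connector config dict.

    The "spaces" / "endpoints" / "exportPath" key names are assumptions.
    """
    for space in config.get("spaces", []):
        for endpoint in space.get("endpoints", []):
            endpoint["exportPath"] = export_path
    return config


if __name__ == "__main__":
    # Export and mount point copied from the df output above; the trailing
    # "nfs rw 0 0" fields are filler. A real hook would read /proc/mounts
    # and the connector configuration file instead of inline samples.
    mounts = (
        "192.168.247.130:/domain0/group-16/appdata_container-50/"
        "appdata_timeflow-136/datafile /mnt/provision/HADOOP_NFS nfs rw 0 0\n"
    )
    config = {"spaces": [{"endpoints": [{
        "host": "nfs://192.168.247.130:2049/",
        "exportPath": "/domain0/group-16/appdata_container-50/"
                      "appdata_timeflow-134/datafile",
        "path": "/",
    }]}]}
    _, export = find_export(mounts, "/mnt/provision/HADOOP_NFS")
    print(json.dumps(update_export(config, export), indent=2))
```

Reading the export from the mount table avoids guessing the timeflow number, which changes on every refresh or rewind.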

[delphix@linuxtarget MapReduceTutorial]$ ll /mnt/provision/HADOOP_NFS/

total 2

drwxr-xr-x 2 delphix oinstall 3 Nov 19 11:36 inputMapReduce

drwxrwxrwx 2 delphix oinstall 5 Aug 18 08:40 msa

drwxrwxrwx 3 delphix oinstall 3 Aug 18 05:21 tmp

drwxrwxrwx 3 delphix oinstall 3 Aug 18 05:23 user

[delphix@linuxtarget MapReduceTutorial]$

[delphix@linuxtarget MapReduceTutorial]$ $HADOOP_HOME/bin/hdfs dfs -copyFromLocal submissions.csv /inputMapReduce

[delphix@linuxtarget MapReduceTutorial]$

[delphix@linuxtarget MapReduceTutorial]$ /u02/hadoop/bin/hadoop jar /u02/hadoop/share/hadoop/tools/lib/hadoop-streaming-3.2.1.jar -input /inputMapReduce/submissions.csv -output /mapred-output -mapper "python /u02/MapReduceTutorial/map.py" -reducer "python /u02/MapReduceTutorial/reduce.py"

2020-11-20 08:40:05,359 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-20 08:40:05,660 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-20 08:40:05,660 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

packageJobJar: [] [/u02/hadoop/share/hadoop/tools/lib/hadoop-streaming-3.2.1.jar] /tmp/streamjob3486654915753636437.jar tmpDir=null

2020-11-20 08:40:06,063 INFO client.RMProxy: Connecting to ResourceManager at /192.168.247.134:8032

2020-11-20 08:40:06,434 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-20 08:40:06,434 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

2020-11-20 08:40:06,460 INFO client.RMProxy: Connecting to ResourceManager at /192.168.247.134:8032

2020-11-20 08:40:06,465 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-20 08:40:06,465 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-145/datafile path=/ has fsId 4902151703787705342

2020-11-20 08:40:07,677 WARN stream.NFSBufferedOutputStream: Flushing a closed stream. Check your code.

2020-11-20 08:40:07,695 INFO stream.NFSBufferedOutputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/tmp/hadoop-yarn/staging/delphix/.staging/job_1605879592523_0001/job.jar

STREAMSTATS streamID: 1

STREAMSTATS ====OutputStream Statistics====

STREAMSTATS BytesWritten: 176820

STREAMSTATS writeOps: 44

STREAMSTATS timeWritten: 0.001 s

STREAMSTATS ====NFS Write Statistics====

STREAMSTATS BytesNFSWritten: 176820

STREAMSTATS NFSWriteOps: 1

STREAMSTATS timeNFSWritten: 0.015 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Write: 168.629 MB/s

STREAMSTATS NFSWrite: 11.242 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Write: 0.023 ms

STREAMSTATS NFSWrite: 15.000 ms

2020-11-20 08:40:07,697 INFO stream.NFSBufferedOutputStream: OutputStream shutdown took 20 ms

2020-11-20 08:40:07,769 INFO mapred.FileInputFormat: Total input files to process : 1

2020-11-20 08:40:07,812 WARN stream.NFSBufferedOutputStream: Flushing a closed stream. Check your code.

2020-11-20 08:40:07,816 INFO stream.NFSBufferedOutputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/tmp/hadoop-yarn/staging/delphix/.staging/job_1605879592523_0001/job.split

STREAMSTATS streamID: 2

STREAMSTATS ====OutputStream Statistics====

STREAMSTATS BytesWritten: 217

STREAMSTATS writeOps: 9

STREAMSTATS timeWritten: 0.0 s

STREAMSTATS ====NFS Write Statistics====

STREAMSTATS BytesNFSWritten: 225

STREAMSTATS NFSWriteOps: 1

STREAMSTATS timeNFSWritten: 0.002 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Write: Infinity MB/s

STREAMSTATS NFSWrite: 0.107 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Write: 0.000 ms

STREAMSTATS NFSWrite: 2.000 ms

2020-11-20 08:40:07,817 INFO stream.NFSBufferedOutputStream: OutputStream shutdown took 5 ms

2020-11-20 08:40:07,845 WARN stream.NFSBufferedOutputStream: Flushing a closed stream. Check your code.

2020-11-20 08:40:07,851 INFO stream.NFSBufferedOutputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/tmp/hadoop-yarn/staging/delphix/.staging/job_1605879592523_0001/job.splitmetainfo

STREAMSTATS streamID: 3

STREAMSTATS ====OutputStream Statistics====

STREAMSTATS BytesWritten: 38

STREAMSTATS writeOps: 3

STREAMSTATS timeWritten: 0.0 s

STREAMSTATS ====NFS Write Statistics====

STREAMSTATS BytesNFSWritten: 54

STREAMSTATS NFSWriteOps: 1

STREAMSTATS timeNFSWritten: 0.002 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Write: Infinity MB/s

STREAMSTATS NFSWrite: 0.026 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Write: 0.000 ms

STREAMSTATS NFSWrite: 2.000 ms

2020-11-20 08:40:07,851 INFO stream.NFSBufferedOutputStream: OutputStream shutdown took 6 ms

2020-11-20 08:40:07,852 INFO mapreduce.JobSubmitter: number of splits:2

2020-11-20 08:40:08,380 WARN stream.NFSBufferedOutputStream: Flushing a closed stream. Check your code.

2020-11-20 08:40:08,393 INFO stream.NFSBufferedOutputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/tmp/hadoop-yarn/staging/delphix/.staging/job_1605879592523_0001/job.xml

STREAMSTATS streamID: 4

STREAMSTATS ====OutputStream Statistics====

STREAMSTATS BytesWritten: 195416

STREAMSTATS writeOps: 24

STREAMSTATS timeWritten: 0.0 s

STREAMSTATS ====NFS Write Statistics====

STREAMSTATS BytesNFSWritten: 390832

STREAMSTATS NFSWriteOps: 2

STREAMSTATS timeNFSWritten: 0.026 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Write: Infinity MB/s

STREAMSTATS NFSWrite: 14.336 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Write: 0.000 ms

STREAMSTATS NFSWrite: 13.000 ms

2020-11-20 08:40:08,394 INFO stream.NFSBufferedOutputStream: OutputStream shutdown took 14 ms

2020-11-20 08:40:08,394 INFO mapreduce.JobSubmitter: Submitting tokens for job: job_1605879592523_0001

2020-11-20 08:40:08,395 INFO mapreduce.JobSubmitter: Executing with tokens: []

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-145/datafile path=/ has fsId 4902151703787705342

2020-11-20 08:40:08,694 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-20 08:40:08,694 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

2020-11-20 08:40:08,698 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-20 08:40:08,698 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-145/datafile path=/ has fsId 4902151703787705342

2020-11-20 08:40:08,983 INFO conf.Configuration: resource-types.xml not found

2020-11-20 08:40:08,983 INFO resource.ResourceUtils: Unable to find 'resource-types.xml'.

2020-11-20 08:40:09,925 INFO impl.YarnClientImpl: Submitted application application_1605879592523_0001

2020-11-20 08:40:10,010 INFO mapreduce.Job: The url to track the job: http://linuxtarget:8088/proxy/application_1605879592523_0001/

2020-11-20 08:40:10,026 INFO mapreduce.Job: Running job: job_1605879592523_0001

2020-11-20 08:40:20,326 INFO mapreduce.Job: Job job_1605879592523_0001 running in uber mode : false

2020-11-20 08:40:20,327 INFO mapreduce.Job: map 0% reduce 0%

2020-11-20 08:40:34,729 INFO mapreduce.Job: Task Id : attempt_1605879592523_0001_m_000001_0, Status : FAILED

[2020-11-20 08:40:33.166]Container killed on request. Exit code is 137

[2020-11-20 08:40:33.181]Container exited with a non-zero exit code 137.

[2020-11-20 08:40:33.347]Killed by external signal

2020-11-20 08:40:35,778 INFO mapreduce.Job: map 50% reduce 0%

2020-11-20 08:40:43,833 INFO mapreduce.Job: map 100% reduce 0%

2020-11-20 08:40:45,851 INFO mapreduce.Job: map 100% reduce 100%

2020-11-20 08:40:46,867 INFO mapreduce.Job: Job job_1605879592523_0001 completed successfully

2020-11-20 08:40:47,075 INFO mapreduce.Job: Counters: 55

File System Counters

FILE: Number of bytes read=151081

FILE: Number of bytes written=989668

FILE: Number of read operations=0

FILE: Number of large read operations=0

FILE: Number of write operations=0

NFS: Number of bytes read=0

NFS: Number of bytes written=4192

NFS: Number of read operations=0

NFS: Number of large read operations=0

NFS: Number of write operations=0

Job Counters

Failed map tasks=1

Launched map tasks=3

Launched reduce tasks=1

Other local map tasks=1

Rack-local map tasks=2

Total time spent by all maps in occupied slots (ms)=59494

Total time spent by all reduces in occupied slots (ms)=14646

Total time spent by all map tasks (ms)=29747

Total time spent by all reduce tasks (ms)=7323

Total vcore-milliseconds taken by all map tasks=29747

Total vcore-milliseconds taken by all reduce tasks=7323

Total megabyte-milliseconds taken by all map tasks=60921856

Total megabyte-milliseconds taken by all reduce tasks=14997504

Map-Reduce Framework

Map input records=132309

Map output records=13500

Map output bytes=124075

Map output materialized bytes=151087

Input split bytes=218

Combine input records=0

Combine output records=0

Reduce input groups=282

Reduce shuffle bytes=151087

Reduce input records=13500

Reduce output records=282

Spilled Records=27000

Shuffled Maps =2

Failed Shuffles=0

Merged Map outputs=2

GC time elapsed (ms)=1334

CPU time spent (ms)=6100

Physical memory (bytes) snapshot=742555648

Virtual memory (bytes) snapshot=9064607744

Total committed heap usage (bytes)=626524160

Peak Map Physical memory (bytes)=308588544

Peak Map Virtual memory (bytes)=3025346560

Peak Reduce Physical memory (bytes)=142086144

Peak Reduce Virtual memory (bytes)=3020419072

Shuffle Errors

BAD_ID=0

CONNECTION=0

IO_ERROR=0

WRONG_LENGTH=0

WRONG_MAP=0

WRONG_REDUCE=0

File Input Format Counters

Bytes Read=0

File Output Format Counters

Bytes Written=4192

2020-11-20 08:40:47,076 INFO streaming.StreamJob: Output directory: /mapred-output

[delphix@linuxtarget MapReduceTutorial]$ /u02/hadoop/bin/hadoop fs -ls /mapred-output

2020-11-20 08:52:53,844 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-20 08:52:54,170 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-20 08:52:54,170 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-145/datafile path=/ has fsId 4902151703787705342

Found 2 items

-rw-r--r-- 1 delphix oinstall 0 2020-11-20 10:40 /mapred-output/_SUCCESS

-rw-r--r-- 1 delphix oinstall 4192 2020-11-20 10:40 /mapred-output/part-00000

[delphix@linuxtarget MapReduceTutorial]$ /u02/hadoop/bin/hadoop fs -cat /mapred-output/part-00000

2020-11-20 08:53:08,923 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

2020-11-20 08:53:09,228 INFO nfs.NFSv3FileSystem: User config file: /u02/hadoop/nfs-usr.json

2020-11-20 08:53:09,228 INFO nfs.NFSv3FileSystem: Group config file: /u02/hadoop/nfs-grp.json

Store with ep Endpoint: host=nfs://192.168.247.130:2049/ export=/domain0/group-16/appdata_container-50/appdata_timeflow-145/datafile path=/ has fsId 4902151703787705342

2020-11-20 08:53:09,431 INFO stream.NFSBufferedInputStream: Changing prefetchBlockLimit to 0

4chan 17108

AbandonedPorn 3132

AdPorn 478

AdrenalinePorn 745

AdviceAnimals 210387

AinsleyHarriott 26

Alex 16

Anarchism 635

AnimalPorn 1148

Anticonsumption 174

AnythingGoesPics 5

ArcherFX 1866

ArchitecturePorn 152

Art 1347

Bad_Cop_No_Donut 56

Borderlands2 271

Braveryjerk 230

Buddhism 411

Chargers 47

Cinemagraphs 327

Cyberpunk 4

DIY 1918

Dallas 121

Demotivational 823

Design 5498

DestructionPorn 671

DrugStashes 43

Drugs 1567

DunderMifflin 349

EarthPorn 38623

EmmaStone 325

EnterShikari 15

Eve 230

ExposurePorn 67

Fallout 943

FancyFollicles 2326

FearMe 91

ForeverAlone 40

Frat 137

FreeKarma 422

Frisson 1142

Fun 6

GetMotivated 7202

GifSound 10029

HIFW 1339

HIMYM 661

HistoryPorn 16992

HumanPorn 594

IASIP 272

INTP 74

Images 871

InfrastructurePorn 153

Justrolledintotheshop 111

KarmaConspiracy 10371

KingOfTheHill 511

LadyBoners 1418

LawSchool 79

LeagueOfMemes 927

Libertarian 3113

LongDistance 132

MMA 800

MURICA 2443

MensRights 532

Military 755

MilitaryPorn 636

Minecraft 1780

MinecraftInspiration 11

MonsterHunter 44

Monstercat 1

Music 554

NoFap 656

NotaMethAddict 89

Offensive_Wallpapers 95

OldSchoolCool 2242

PandR 630

Pareidolia 482

PenmanshipPorn 7

PerfectTiming 7306

Pictures 113

Pizza 82

PocketWhales 199

PrettyGirlsUglyFaces 156

Punny 393

Rainmeter 25

RedditDayOf 147

RedditLaqueristas 756

RedditThroughHistory 545

RoomPorn 1061

SkyPorn 455

Slender_Man 991

SquaredCircle 142

StarcraftCirclejerk 59

Stargate 126

Steam 722

StencilTemplates 76

StonerProTips 469

SuicideWatch 338

TalesFromRetail 209

Terraria 24

ThanksObama 363

That70sshow 198

TheLastAirbender 367

TheSimpsons 4924

TheStopGirl 713

TopGear 470

Torchlight 7

TrollXChromosomes 690

TwoXChromosomes 303

VillagePorn 488

WTF 1227869

alternativeart 385

analogygifs 63

animation 8

antibaw 92

arresteddevelopment 3808

atheism 403535

australia 304

aww 593373

awwwtf 1026

baseball 163

batman 4161

battlefield3 210

battlestations 1194

beatles 286

beermoney 2

bicycling 1150

bigbangtheory 326

biology 729

bitchimabus 626

bleach 70

breakingbad 8894

brokengifs 94

calvinandhobbes 1573

canada 1214

canucks 14

cars 150

cats 2391

chemicalreactiongifs 3210

chicago 227

cincinnati 112

classic4chan 1200

comics 21223

community 2336

conspiracy 8266

corgi 1076

creepy 6772

cringe 241

cringepics 4421

cripplingalcoholism 312

darksouls 381

dayz 279

dbz 942

depression 857

diablo3 1417

disney 670

doctorwho 795

dogpictures 1792

drunk 763

dwarffortress 202

facepalm 3473

fffffffuuuuuuuuuuuu 21701

firstworldanarchists 6871

firstworldproblems 148

fitnesscirclejerk 18

forwardsfromgrandma 460

funny 7529130

futurama 6849

gameofthrones 159

gaming 433217

gaybros 525

gaygeek 45

gaymers 790

geek 21629

gentlemanboners 3152

germany 252

ggggg 116

gif 9581

gifs 641628

google 468

guns 474

happy 157

hcfactions 54

hiphopheads 6481

hockey 1157

horror 231

ifiwonthelottery 317

illusionporn 492

iphone 972

itookapicture 1112

katyperry 146

leagueoflegends 1017

led_zeppelin 100

lgbt 156

lol 386

lolcats 2008

lotr 513

magicTCG 309

malehairadvice 92

mathematics 28

mfw 256

mildlyinteresting 32651

misc 92

mlb 190

moosedongs 18

motorcitykitties 7

motorcycles 8

movies 29467

musicgifstation 15

mylittleandysonic1 4

mylittlepony 1774

nasa 106

nba 4160

nope 128

nostalgia 179

nyc 1018

occupywallstreet 57

offbeat 11168

onetruegod 2081

pantheism 27

philadelphia 198

photoshopbattles 3728

pics 3445794

pokemon 13763

politics 14080

pooplikethis 85

proper 1092

ps3bf3 57

radiohead 176

rage 162

ragefaces 18

reactiongifs 92680

reddit.com 126546

rit 92

sandy 7516

sanfrancisco 447

science 18

secretsanta 528

see 1295

sex 2500

shazbot 30

shittyaskscience 23

shittyreactiongifs 295

skateboarding 476

skyrim 6406

sloths 27

southpark 73

space 19185

spaceporn 1762

spongebob 276

sports 2581

starcraft 91

startrek 1167

steampunk 452

steelers 136

sweden 186

synchronizedswimWTF 161

tall 858

technology 4124

teenagers 424

terriblefacebookmemes 254

tf2 207

thewalkingdead 1245

todayilearned 2706

toronto 134

trees 101598

trippy 32

twincitiessocial 27

ukpolitics 294

unitedkingdom 10624

upvote 60

upvotegifs 201

vertical 2043

wallpaper 1365

wallpapers 7154

waterporn 1303

wheredidthesodago 3472

whoselineisitanyway 63

woahdude 15109

wordplay 3

workaholics 417

zelda 475

zombies 1687

2020-11-20 08:53:09,437 INFO stream.NFSBufferedInputStream: STREAMSTATSstreamStatistics:

STREAMSTATS name: class org.apache.hadoop.fs.nfs.stream.NFSBufferedInputStream/mapred-output/part-00000

STREAMSTATS streamID: 1

STREAMSTATS ====InputStream Statistics====

STREAMSTATS BytesRead: 4192

STREAMSTATS readOps: 2

STREAMSTATS timeRead: 0.005 s

STREAMSTATS ====NFS Read Statistics====

STREAMSTATS BytesNFSRead: 4192

STREAMSTATS NFSReadOps: 1

STREAMSTATS timeNFSRead: 0.002 s

STREAMSTATS ====Bandwidth====

STREAMSTATS Read: 0.800 MB/s

STREAMSTATS NFSRead: 1.999 MB/s

STREAMSTATS ====Average Latency====

STREAMSTATS Read: 2.500 ms

STREAMSTATS NFSRead: 2.000 ms

[delphix@linuxtarget MapReduceTutorial]$
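The streaming job above relies on map.py and reduce.py, which are not listed in this post. As a rough sketch of what such a pair could look like, the Python code below emits one "subreddit, value" pair per CSV record and then sums the values per subreddit. Which column the real job aggregates is not stated, so using `total_votes` is an assumption, as are the function names and the `map` / `reduce` mode argument.

```python
import csv
import sys

SUBREDDIT_COL = 7  # position of "subreddit" in the header shown in this post
VALUE_COL = 4      # "total_votes"; the column the real job sums is an assumption


def map_lines(lines):
    """Yield "subreddit<TAB>value" for every well-formed CSV record."""
    for row in csv.reader(lines):
        if not row or row[0].startswith("#"):
            continue  # skip blank lines and the header
        try:
            yield "%s\t%d" % (row[SUBREDDIT_COL], int(row[VALUE_COL]))
        except (IndexError, ValueError):
            continue  # skip malformed records


def reduce_lines(lines):
    """Sum values per key; Hadoop streaming delivers lines sorted by key."""
    current, total = None, 0
    for line in lines:
        key, _, value = line.rstrip("\n").partition("\t")
        if key != current:
            if current is not None:
                yield "%s\t%d" % (current, total)
            current, total = key, 0
        total += int(value)
    if current is not None:
        yield "%s\t%d" % (current, total)


if __name__ == "__main__":
    # Hypothetical usage: "script.py map" or "script.py reduce", reading stdin.
    if len(sys.argv) > 1 and sys.argv[1] in ("map", "reduce"):
        step = map_lines if sys.argv[1] == "map" else reduce_lines
        for out in step(sys.stdin):
            print(out)
```

With two separate files, as in the command above, map.py would loop over `map_lines(sys.stdin)` and reduce.py over `reduce_lines(sys.stdin)`. Parsing with the `csv` module matters here because several titles are quoted and contain commas.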

First, define the format file and import it into the masking engine.
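For a delimited file, the format file is essentially the list of column names. The small Python sketch below derives that list from the CSV header. It assumes the masking engine expects one field name per line, which should be checked against the documentation for your engine version. The function name is illustrative, and stripping the leading "#" from the first column is my own choice.

```python
import csv


def format_file_lines(header_line, delimiter=","):
    """Return the field names of a delimited header, one per list entry."""
    fields = next(csv.reader([header_line], delimiter=delimiter))
    # The sample file prefixes its header with "#"; dropping it is an
    # assumption, keep the name verbatim if you prefer.
    fields[0] = fields[0].lstrip("#")
    return [name.strip() for name in fields]


if __name__ == "__main__":
    header = ("#image_id,unixtime,rawtime,title,total_votes,reddit_id,"
              "number_of_upvotes,subreddit,number_of_downvotes,localtime,"
              "score,number_of_comments,username")
    # One name per line; redirect the output to a file of your choice.
    print("\n".join(format_file_lines(header)))
```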

For test purposes, I will apply the FIRST_NAME algorithm to mask the username column.

At this point, just call the job from a Vfile hook using the masking API.
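A hook can trigger the job with two HTTP calls: log in to obtain a token, then post an execution for the job. The Python sketch below only builds the requests, so it can be read without an engine at hand. The endpoint paths (`/login`, `/executions` under `/masking/api`), the JSON field names, the engine address and the job id are assumptions to verify against your masking engine's API documentation.

```python
import json
import urllib.request


def login_request(base_url, username, password):
    """Build the login call; the response is expected to carry an auth token."""
    body = json.dumps({"username": username, "password": password}).encode()
    return urllib.request.Request(
        base_url + "/login", data=body, method="POST",
        headers={"Content-Type": "application/json"})


def execution_request(base_url, token, job_id):
    """Build the call that starts one execution of an existing masking job."""
    body = json.dumps({"jobId": job_id}).encode()
    return urllib.request.Request(
        base_url + "/executions", data=body, method="POST",
        headers={"Content-Type": "application/json", "Authorization": token})


if __name__ == "__main__":
    # Hypothetical engine address and job id.
    base = "http://masking-engine.example/masking/api"
    req = execution_request(base, "<token from login>", 7)
    print(req.get_method(), req.full_url, req.data.decode())
    # A real hook would send both requests with urllib.request.urlopen(...)
    # and wait for the execution to finish before handing the vfile over.
```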

And voilà.

Let's compare the file from the source (unmasked) with the one on the target (masked by the job).

#image_id,unixtime,rawtime,title,total_votes,reddit_id,number_of_upvotes,subreddit,number_of_downvotes,localtime,score,number_of_comments,username

0,1333172439,2012-03-31T12:40:39.590113-07:00,And here's a downvote.,63470,rmqjs,32657,funny,30813,1333197639,1844,622,Animates_Everything

0,1333178161,2012-03-31T14:16:01.093638-07:00,Expectation,35,rmun4,29,GifSound,6,1333203361,23,3,Gangsta_Raper

0,1333199913,2012-03-31T20:18:33.192906-07:00,Downvote,41,rna86,32,GifSound,9,1333225113,23,0,Gangsta_Raper

0,1333252330,2012-04-01T10:52:10-07:00,Every time I downvote something,10,ro7e4,6,GifSound,4,1333277530,2,0,Gangsta_Raper

0,1333272954,2012-04-01T16:35:54.393381-07:00,Downvote "Dies Irae",65,rooof,57,GifSound,8,1333298154,49,0,Gangsta_Raper

0,1333761060,2012-04-07T08:11:00-07:00,"Demolished, every time you downvote someone",40,rxwjg,17,gifs,23,1333786260,-6,3,Hellothereawesome

0,1335503834,2012-04-27T12:17:14.103167-07:00,how i feel whenever i submit here,104,svpq7,67,fffffffuuuuuuuuuuuu,37,1335529034,30,12,

0,1339160075,2012-06-08T19:54:35.421944-07:00,getting that first downvote on a new post,13,usmxn,5,funny,8,1339185275,-3,0,

0,1339407879,2012-06-11T16:44:39.947798-07:00,How reddit seems to reacts whenever I share a milestone that I passed,14,uwzrd,6,funny,8,1339433079,-2,0,

0,1339425291,2012-06-11T21:34:51.692933-07:00,Every LastAirBender post with a NSFW tag,20,uxf5q,9,pics,11,1339450491,-2,0,HadManySons

0,1340008115,2012-06-18T15:28:35.800140-07:00,How I felt when i forgot to put "spoiler" in a comment,21,v8vl7,10,gifs,11,1340033315,-1,0,TraumaticASH

0,1340020566,2012-06-18T18:56:06.440319-07:00,What r/AskReddit did to me when I asked something about 9gag.,271,v970d,210,gifs,61,1340045766,149,5,MidgetDance1337

0,1340084902,2012-06-19T12:48:22.539790-07:00,My brother when he found my reddit account.,8494,vah9p,4612,funny,3882,1340110102,730,64,Pazzaz

0,1341036761,2012-06-30T13:12:41.517254-07:00,The reaction i face when i express a view for religion.,23,vuqcr,15,atheism,8,1341061961,7,4,

0,1341408717,2012-07-04T20:31:57.182234-07:00,How I feel on r/atheism,21,w27bp,8,funny,13,1341433917,-5,3,koolkows

0,1341779603,2012-07-09T03:33:23.514877-07:00,When I see a rage comic out of its sub Reddit.,24,w9k12,14,funny,10,1341804803,4,0,Moncole

0,1342782548,2012-07-20T18:09:08.903673-07:00,Sitting as a /new knight of /r/gaming seeing all the insanely twisted shadow planet flash sale posts.,21,wwjak,8,gaming,13,1342807748,-5,4,shortguy014

0,1343172264,2012-07-25T06:24:24.535558-07:00,How I act when I see a "How I feel when.." post in /r/funny,14,x4ogv,6,funny,8,1343197464,-2,0,Killer2000

0,1343626962,2012-07-30T12:42:42.262869-07:00,"Ohhh, that's a lovely picture of your country.",14,xenyw,7,pics,7,1343652162,0,0,todaysuckstomorrow

0,1344874478,2012-08-13T23:14:38-07:00,Pretty much what happened to my pixel art post on /r/minecraft.,1,y6v1k,1,gifs,0,1344899678,1,0,

0,1344923101,2012-08-14T12:45:01-07:00,Anatomy of a downvote,167,y7wwi,97,gifs,70,1344948301,27,6,LazyBurnbaby

0,1347339289,2012-09-11T04:54:49+00:00,Whenever I See a Post Complaining About Downvotes,10,zp04o,6,funny,4,1347339289,2,0,

0,1347771382,2012-09-16T04:56:22+00:00,Seeing the same thing posted multiple times by the same person in 'new',56,zyk4s,39,funny,17,1347771382,22,0,azcomputerguru

0,1347806800,2012-09-16T21:46:40-07:00,"Seeing something you posted yesterday on the front page, posted by someone else",14,100bfe,9,funny,5,1347832000,4,0,IronOxide42

0,1347923765,2012-09-17T23:16:05+00:00,When I remember it's Monday...,3,101rif,1,funny,2,1347923765,-1,0,

0,1348431656,2012-09-23T20:20:56+00:00,Destructive downvote,257,10ctqr,219,GifSound,38,1348431656,181,14,Gangsta_Raper

0,1348800190,2012-09-28T02:43:10+00:00,How I Reddit when drinking bourbon whiskey. Sorry everyone. (gif.),21,10llkg,10,funny,11,1348800190,-1,4,CrowKaneII

1,1347043558,2012-09-07T18:45:58+00:00,This is incredible; note how the faces look before you stare at the center of the image,78,zio2h,60,gifs,18,1347043558,42,12,Hysteriia

1,1347164191,2012-09-09T04:16:31+00:00,What the...,4,zl7eu,2,gif,2,1347164191,0,0,IronicIvan

1,1351825236,2012-11-02T03:00:36+00:00,The faces...,122,12huw6,92,WTF,30,1351825236,62,23,CeruleanThunder

1,1351934419,2012-11-03T09:20:19+00:00,"Definitely one of the weirder GIFs I've come across. Look at the text in the centre, the faces in your peripheral vision will become increasingly fucked up, but the s econd you look directly at the faces they'll become normal.",13121,12k4kx,7452,WTF,5669,1351934419,1783,294,The_Bhuda_Palm

1,1352759302,2012-11-12T22:28:22+00:00,Look in the middle,14,1335av,1,WTF,13,1352759302,-12,3,LOUD_DUCK

100,1358858328,2013-01-22T12:38:48+00:00,Included in the informational packet given to performing artists before a sporting event.,8,171vu0,5,funny,3,1358858328,2,0,Sarbanes_Foxy

100,1358871815,2013-01-22T16:23:35+00:00,how people tend to sing the national anthem at sporting events these days,10,17272u,7,pics,3,1358871815,4,3,awcreative

100,1358873901,2013-01-22T16:58:21+00:00,Star Spangled Banner - Sporting Event Version,5,1729gb,4,funny,1,1358873901,3,0,potato1

100,1358874067,2013-01-22T17:01:07+00:00,How to sing the national anthem at a sporting event.,15104,1729nd,8548,funny,6556,1358874067,1992,352,watcher_of_the_skies

100,1358880415,2013-01-22T18:46:55+00:00,Star Spangled Banner - Sporting Event Edition,13,172htq,8,funny,5,1358880415,3,0,tianan

100,1358882437,2013-01-22T19:20:37+00:00,Star Spangled Banner: Sporting Event Version,19,172ki8,11,funny,8,1358882437,3,2,

100,1358893345,2013-01-22T22:22:25+00:00,Star Spangled Banner - Sporting Event Version,13,172zeg,8,funny,5,1358893345,3,0,Spam4119

100,1358895795,2013-01-22T23:03:15+00:00,How the American national anthem is generally performed in public.,6,1732pc,3,pics,3,1358895795,0,2,

100,1358898147,2013-01-22T23:42:27+00:00,As painfully slow as possible.,12,1735pk,5,funny,7,1358898147,-2,0,

100,1358899057,2013-01-22T23:57:37+00:00,How to sing the Star Spangled Banner,9,1736um,2,funny,7,1358899057,-5,0,beardface909

100,1358950344,2013-01-23T14:12:24+00:00,Sheet Music for the Star Spangled Banner (Sporting Event Version),12,174egv,4,funny,8,1358950344,-4,3,Rockytriton

100,1359024481,2013-01-24T10:48:01+00:00,So I hear Alicia Keys will be opening the Super Bowl...,8,176mtx,2,funny,6,1359024481,-4,2,

100,1359071452,2013-01-24T23:50:52+00:00,In honor of the upcoming super bowl...,17,1780h3,6,funny,11,1359071452,-5,3,

1000,1312325091,2011-08-03T05:44:51-07:00,Joker's Boner,65,j7of5,37,funny,28,1312350291,9,6,MrBlockOfCheese

1000,1352602528,2012-11-11T02:55:28+00:00,When boner meant mistake . . .,15665,12zr9n,8835,funny,6830,1352602528,2005,126,Athejew

1000,1352604659,2012-11-11T10:30:59-07:00,The Joker Gangbangs His Way Into the Hearts of Gotham! [FIXED] (read the fine print),6,130lci,3,funny,3,1352629859,0,0,

1000,1352647211,2012-11-11T15:20:11+00:00,Joker's Boners... [FIXED],10,130f73,3,funny,7,1352647211,-4,0,

1000,1352649045,2012-11-11T15:50:45+00:00,"Wait, what? WTF Joker? That's not ok, man...",17,130gel,5,WTF,12,1352649045,-7,4,

10001,1305876174,2011-05-20T14:22:54-07:00,"Obama Sushi, I feel damaged.",41,hg7mq,31,pics,10,1305901374,21,4,RIAEvangelist

10001,1321442569,2011-11-16T18:22:49-07:00,"i'll have the obama roll, with extra wasabi",60,mf41l,45,pics,15,1321467769,30,6,herpichj

10001,1330303529,2012-02-27T07:45:29-07:00,Google search for "American Sushi",106,q84mz,81,WTF,25,1330328729,56,5,JGDC

10001,1349729096,2012-10-08T20:44:56+00:00,I saw your Hello Kitty Sushi and Raise you OBama Sushi,157,115n39,119,pics,38,1349729096,81,6,charles1er

10001,1349731973,2012-10-08T21:32:53+00:00,Obama Sushi,20,115qnt,11,pics,9,1349731973,2,3,imaznumkay

10001,1350867228,2012-10-22T00:53:48+00:00,Obamaki,12,11vd24,8,pics,4,1350867228,4,0,AMightySandwich

#image_id,unixtime,rawtime,title,total_votes,reddit_id,number_of_upvotes,subreddit,number_of_downvotes,localtime,score,number_of_comments,Angle

0,1333172439,2012-03-31T12:40:39.590113-07:00,And here's a downvote.,63470,rmqjs,32657,funny,30813,1333197639,1844,622,Verdie

0,1333178161,2012-03-31T14:16:01.093638-07:00,Expectation,35,rmun4,29,GifSound,6,1333203361,23,3,Delmar

0,1333199913,2012-03-31T20:18:33.192906-07:00,Downvote,41,rna86,32,GifSound,9,1333225113,23,0,Delmar

0,1333252330,2012-04-01T10:52:10-07:00,Every time I downvote something,10,ro7e4,6,GifSound,4,1333277530,2,0,Delmar

0,1333272954,2012-04-01T16:35:54.393381-07:00,Downvote "Dies Irae",65,rooof,57,GifSound,8,1333298154,49,0,Delmar

0,1333761060,2012-04-07T08:11:00-07:00,"Demolished, every time you downvote someone",40,rxwjg,17,gifs,23,1333786260,-6,Lucile

0,1335503834,2012-04-27T12:17:14.103167-07:00,how i feel whenever i submit here,104,svpq7,67,fffffffuuuuuuuuuuuu,37,1335529034,30,12,

0,1339160075,2012-06-08T19:54:35.421944-07:00,getting that first downvote on a new post,13,usmxn,5,funny,8,1339185275,-3,0,

0,1339407879,2012-06-11T16:44:39.947798-07:00,How reddit seems to reacts whenever I share a milestone that I passed,14,uwzrd,6,funny,8,1339433079,-2,0,

0,1339425291,2012-06-11T21:34:51.692933-07:00,Every LastAirBender post with a NSFW tag,20,uxf5q,9,pics,11,1339450491,-2,0,Palmira

0,1340008115,2012-06-18T15:28:35.800140-07:00,How I felt when i forgot to put "spoiler" in a comment,21,v8vl7,10,gifs,11,1340033315,-1,0,Magda

0,1340020566,2012-06-18T18:56:06.440319-07:00,What r/AskReddit did to me when I asked something about 9gag.,271,v970d,210,gifs,61,1340045766,149,5,Forrest

0,1340084902,2012-06-19T12:48:22.539790-07:00,My brother when he found my reddit account.,8494,vah9p,4612,funny,3882,1340110102,730,64,Lachelle

0,1341036761,2012-06-30T13:12:41.517254-07:00,The reaction i face when i express a view for religion.,23,vuqcr,15,atheism,8,1341061961,7,4,

0,1341408717,2012-07-04T20:31:57.182234-07:00,How I feel on r/atheism,21,w27bp,8,funny,13,1341433917,-5,3,Marlin

0,1341779603,2012-07-09T03:33:23.514877-07:00,When I see a rage comic out of its sub Reddit.,24,w9k12,14,funny,10,1341804803,4,0,Beryl

0,1342782548,2012-07-20T18:09:08.903673-07:00,Sitting as a /new knight of /r/gaming seeing all the insanely twisted shadow planet flash sale posts.,21,wwjak,8,gaming,13,1342807748,-5,4,Ma

0,1343172264,2012-07-25T06:24:24.535558-07:00,How I act when I see a "How I feel when.." post in /r/funny,14,x4ogv,6,funny,8,1343197464,-2,0,Pat

0,1343626962,2012-07-30T12:42:42.262869-07:00,"Ohhh, that's a lovely picture of your country.",14,xenyw,7,pics,7,1343652162,0,Magan

0,1344874478,2012-08-13T23:14:38-07:00,Pretty much what happened to my pixel art post on /r/minecraft.,1,y6v1k,1,gifs,0,1344899678,1,0,

0,1344923101,2012-08-14T12:45:01-07:00,Anatomy of a downvote,167,y7wwi,97,gifs,70,1344948301,27,6,Reina

0,1347339289,2012-09-11T04:54:49+00:00,Whenever I See a Post Complaining About Downvotes,10,zp04o,6,funny,4,1347339289,2,0,

0,1347771382,2012-09-16T04:56:22+00:00,Seeing the same thing posted multiple times by the same person in 'new',56,zyk4s,39,funny,17,1347771382,22,0,Marita

0,1347806800,2012-09-16T21:46:40-07:00,"Seeing something you posted yesterday on the front page, posted by someone else",14,100bfe,9,funny,5,1347832000,4,Magan

0,1347923765,2012-09-17T23:16:05+00:00,When I remember it's Monday...,3,101rif,1,funny,2,1347923765,-1,0,

0,1348431656,2012-09-23T20:20:56+00:00,Destructive downvote,257,10ctqr,219,GifSound,38,1348431656,181,14,Delmar

0,1348800190,2012-09-28T02:43:10+00:00,How I Reddit when drinking bourbon whiskey. Sorry everyone. (gif.),21,10llkg,10,funny,11,1348800190,-1,4,Pearline

1,1347043558,2012-09-07T18:45:58+00:00,This is incredible; note how the faces look before you stare at the center of the image,78,zio2h,60,gifs,18,1347043558,42,12,Christin

1,1347164191,2012-09-09T04:16:31+00:00,What the...,4,zl7eu,2,gif,2,1347164191,0,0,Jayna

1,1351825236,2012-11-02T03:00:36+00:00,The faces...,122,12huw6,92,WTF,30,1351825236,62,23,Ismael

1,1351934419,2012-11-03T09:20:19+00:00,"Definitely one of the weirder GIFs I've come across. Look at the text in the centre, the faces in your peripheral vision will become increasingly fucked up, but the s econd you look directly at the faces they'll become normal.",13121,12k4kx,7452,WTF,5669,1351934419,Mathew

1,1352759302,2012-11-12T22:28:22+00:00,Look in the middle,14,1335av,1,WTF,13,1352759302,-12,3,Indira

100,1358858328,2013-01-22T12:38:48+00:00,Included in the informational packet given to performing artists before a sporting event.,8,171vu0,5,funny,3,1358858328,2,0,Stevie

100,1358871815,2013-01-22T16:23:35+00:00,how people tend to sing the national anthem at sporting events these days,10,17272u,7,pics,3,1358871815,4,3,Jeanne

100,1358873901,2013-01-22T16:58:21+00:00,Star Spangled Banner - Sporting Event Version,5,1729gb,4,funny,1,1358873901,3,0,Wendolyn

100,1358874067,2013-01-22T17:01:07+00:00,How to sing the national anthem at a sporting event.,15104,1729nd,8548,funny,6556,1358874067,1992,352,Niki

100,1358880415,2013-01-22T18:46:55+00:00,Star Spangled Banner - Sporting Event Edition,13,172htq,8,funny,5,1358880415,3,0,Chris

100,1358882437,2013-01-22T19:20:37+00:00,Star Spangled Banner: Sporting Event Version,19,172ki8,11,funny,8,1358882437,3,2,

100,1358893345,2013-01-22T22:22:25+00:00,Star Spangled Banner - Sporting Event Version,13,172zeg,8,funny,5,1358893345,3,0,Ernestine

100,1358895795,2013-01-22T23:03:15+00:00,How the American national anthem is generally performed in public.,6,1732pc,3,pics,3,1358895795,0,2,

100,1358898147,2013-01-22T23:42:27+00:00,As painfully slow as possible.,12,1735pk,5,funny,7,1358898147,-2,0,

100,1358899057,2013-01-22T23:57:37+00:00,How to sing the Star Spangled Banner,9,1736um,2,funny,7,1358899057,-5,0,Thanh

100,1358950344,2013-01-23T14:12:24+00:00,Sheet Music for the Star Spangled Banner (Sporting Event Version),12,174egv,4,funny,8,1358950344,-4,3,Kara

100,1359024481,2013-01-24T10:48:01+00:00,So I hear Alicia Keys will be opening the Super Bowl...,8,176mtx,2,funny,6,1359024481,-4,2,

100,1359071452,2013-01-24T23:50:52+00:00,In honor of the upcoming super bowl...,17,1780h3,6,funny,11,1359071452,-5,3,

1000,1312325091,2011-08-03T05:44:51-07:00,Joker's Boner,65,j7of5,37,funny,28,1312350291,9,6,Marissa

1000,1352602528,2012-11-11T02:55:28+00:00,When boner meant mistake . . .,15665,12zr9n,8835,funny,6830,1352602528,2005,126,Boris

1000,1352604659,2012-11-11T10:30:59-07:00,The Joker Gangbangs His Way Into the Hearts of Gotham! [FIXED] (read the fine print),6,130lci,3,funny,3,1352629859,0,0,

1000,1352647211,2012-11-11T15:20:11+00:00,Joker's Boners... [FIXED],10,130f73,3,funny,7,1352647211,-4,0,

1000,1352649045,2012-11-11T15:50:45+00:00,"Wait, what? WTF Joker? That's not ok, man...",17,130gel,5,WTF,12,1352649045,Charlesetta

10001,1305876174,2011-05-20T14:22:54-07:00,"Obama Sushi, I feel damaged.",41,hg7mq,31,pics,10,1305901374,21,Shea

10001,1321442569,2011-11-16T18:22:49-07:00,"i'll have the obama roll, with extra wasabi",60,mf41l,45,pics,15,1321467769,30,Victoria

10001,1330303529,2012-02-27T07:45:29-07:00,Google search for "American Sushi",106,q84mz,81,WTF,25,1330328729,56,5,Kellye

10001,1349729096,2012-10-08T20:44:56+00:00,I saw your Hello Kitty Sushi and Raise you OBama Sushi,157,115n39,119,pics,38,1349729096,81,6,Deedee

10001,1349731973,2012-10-08T21:32:53+00:00,Obama Sushi,20,115qnt,11,pics,9,1349731973,2,3,Iva

10001,1350867228,2012-10-22T00:53:48+00:00,Obamaki,12,11vd24,8,pics,4,1350867228,4,0,Jocelyn
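Comparing the two listings by eye is tedious, so a short Python sketch can report which columns differ between each source record and its masked counterpart. The sample rows are copied from the output above; the function name is illustrative and not part of any Delphix tooling.

```python
import csv


def changed_columns(source_lines, masked_lines):
    """Return the sorted column indexes whose values differ in any record pair.

    Only pairs with the same number of fields are compared.
    """
    changed = set()
    for src, dst in zip(csv.reader(source_lines), csv.reader(masked_lines)):
        if len(src) != len(dst):
            continue
        changed.update(i for i, (a, b) in enumerate(zip(src, dst)) if a != b)
    return sorted(changed)


if __name__ == "__main__":
    source = [
        "0,1339425291,2012-06-11T21:34:51.692933-07:00,Every LastAirBender post "
        "with a NSFW tag,20,uxf5q,9,pics,11,1339450491,-2,0,HadManySons",
        "0,1340084902,2012-06-19T12:48:22.539790-07:00,My brother when he found "
        "my reddit account.,8494,vah9p,4612,funny,3882,1340110102,730,64,Pazzaz",
    ]
    masked = [
        "0,1339425291,2012-06-11T21:34:51.692933-07:00,Every LastAirBender post "
        "with a NSFW tag,20,uxf5q,9,pics,11,1339450491,-2,0,Palmira",
        "0,1340084902,2012-06-19T12:48:22.539790-07:00,My brother when he found "
        "my reddit account.,8494,vah9p,4612,funny,3882,1340110102,730,64,Lachelle",
    ]
    print(changed_columns(source, masked))  # prints [12], the username column
```

Pairs with different field counts are skipped rather than guessed at. A few masked rows above, those whose title is quoted and contains commas, do end up with fewer fields than their source rows. It may be worth checking how the file format handles quoted delimiters before relying on the result.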

Great, the job applied the defined masking algorithm to the CSV file. Note also that any supported source type located on the HADOOP Vfile can be masked the same way.

This demonstrates how HADOOP's non-production environments can be brought to the next level by combining Delphix capabilities with the NFS connector.

In the next post, I will demonstrate the second option, "SEMI INTEGRATION".
