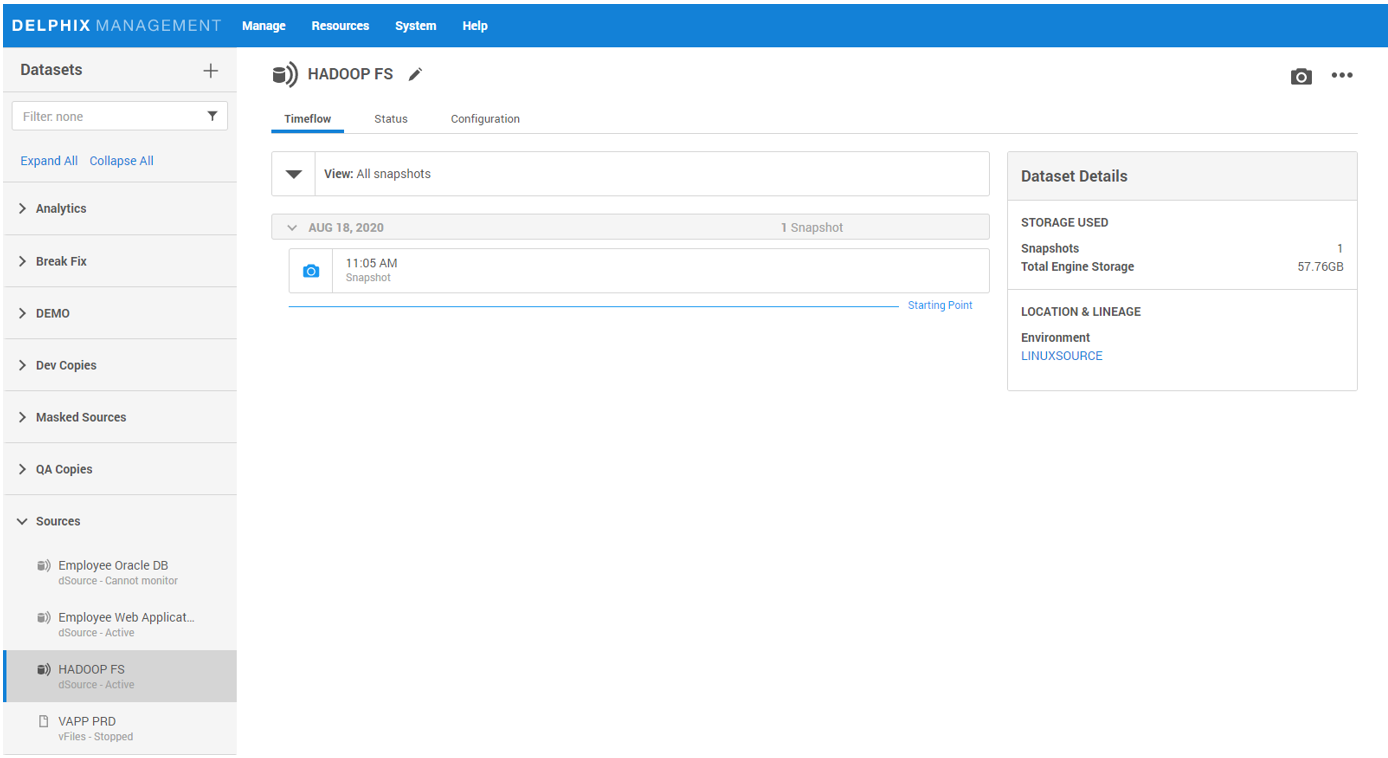

In this post, I will demonstrate how to connect Delphix to a HADOOP FS (HDFS) source.

Create a directory and mount the HADOOP FS

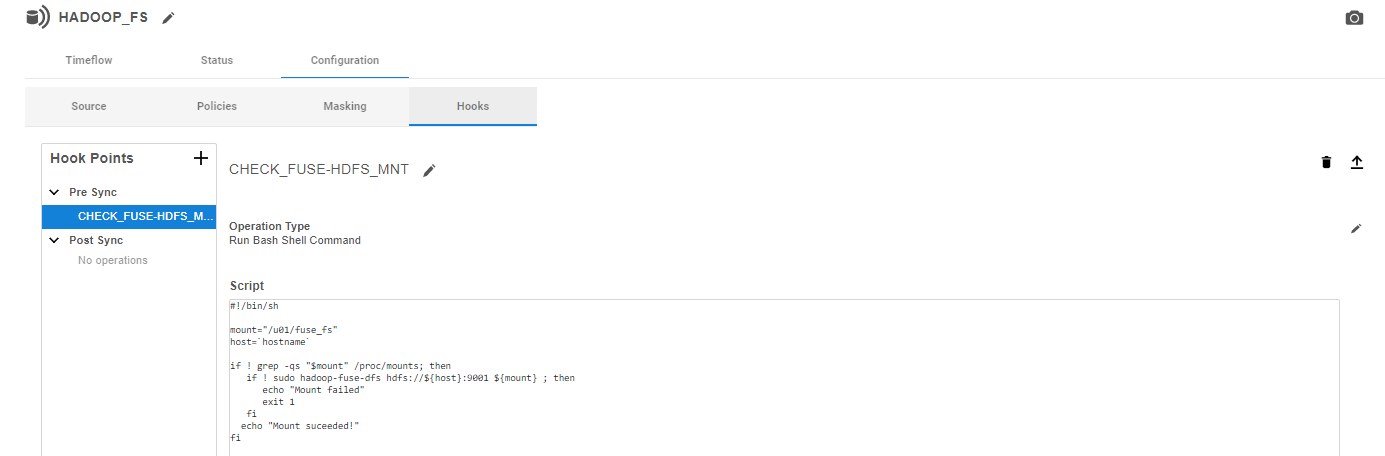

I will add a pre-sync hook that checks that the FUSE mount is accessible. This stops Delphix from snapshotting the empty local mount-point directory when the FUSE mount is down, so every snapshot taken reflects the HADOOP FS content.
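A minimal sketch of what such a pre-sync hook could contain. It assumes the mount point `/u01/fuse_fs` used later in this post, and that a non-zero exit status from the hook makes Delphix abort the sync. The function name `check_fuse_mount` and the 30-second timeout are my own choices, not part of Delphix or Hadoop.

```shell
#!/bin/sh
# Sketch of a pre-sync hook check (check_fuse_mount is a hypothetical helper,
# not a Delphix API). Succeeds only if the path is an active mount point that
# answers a directory listing.
check_fuse_mount() {
  mnt="$1"
  # 1. The path must appear as a mount point in /proc/mounts (Linux only;
  #    paths containing spaces are escaped there and would not match).
  if ! awk -v m="$mnt" '$2 == m { found = 1 } END { exit !found }' /proc/mounts; then
    echo "ERROR: $mnt is not mounted" >&2
    return 1
  fi
  # 2. The mount must respond. A dead FUSE daemon typically makes ls fail
  #    or hang, so the call is bounded with timeout.
  if ! timeout 30 ls "$mnt" >/dev/null 2>&1; then
    echo "ERROR: $mnt is mounted but not readable" >&2
    return 2
  fi
  echo "OK: $mnt is mounted and readable"
}
```

In the hook itself, the check would be called as `check_fuse_mount /u01/fuse_fs || exit 1`. The `/proc/mounts` test matters because, when the FUSE mount is down, `/u01/fuse_fs` is just an empty local directory that `ls` lists without complaint.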

In the first article, I installed and validated a single-node HADOOP cluster.

Let us now have some fun with the FUSE (Filesystem in Userspace) library, which gives access to HADOOP data as a standard Linux filesystem using the Mountable HDFS technique.

Start by installing the FUSE package:

sudo yum install -y hadoop-hdfs-fuse.x86_64

[delphix@linuxsource ~]$ mkdir /u01/fuse_fs

[delphix@linuxsource ~]$ sudo hadoop-fuse-dfs hdfs://192.168.247.133:9001 /u01/fuse_fs

INFO /data/jenkins/workspace/generic-package-rhel64-6-0/topdir/BUILD/hadoop-2.6.0-cdh5.16.2/hadoop-hdfs-project/hadoop-hdfs/src/main/native/fuse-dfs/fuse_options.c:164 Adding FUSE arg /u01/fuse_fs

[delphix@linuxsource ~]$ df -h

Filesystem Size Used Avail Use% Mounted on

/dev/mapper/vg_linuxsource-lv_root

14G 12G 1.7G 88% /

tmpfs 2.0G 0 2.0G 0% /dev/shm

/dev/sda1 477M 110M 342M 25% /boot

/dev/sdb 30G 19G 9.9G 65% /u01

/dev/sdc 20G 4.7G 14G 26% /u02

fuse_dfs 20G 0 20G 0% /u01/fuse_fs
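The same check can be scripted from `df` output. A minimal sketch, assuming the device name `fuse_dfs` shown above. It reads `df -P` (POSIX output format) so that each filesystem stays on a single line, unlike the wrapped `/dev/mapper/vg_linuxsource-lv_root` entry in the `df -h` listing. The function name `is_fuse_dfs_mounted` is hypothetical.

```shell
#!/bin/sh
# Hypothetical helper: read `df -P` output on stdin and succeed only if a
# filesystem named fuse_dfs is mounted exactly at the given path.
is_fuse_dfs_mounted() {
  # Skip the header line; in df -P output the device is the first column
  # and the mount point is the last one.
  awk -v m="$1" 'NR > 1 && $1 == "fuse_dfs" && $NF == m { found = 1 } END { exit !found }'
}
```

Usage: `df -P | is_fuse_dfs_mounted /u01/fuse_fs && echo "HDFS is mounted"`.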

Let's check that we can interact with our HADOOP FS as a standard Linux filesystem:

[delphix@linuxsource ~]$ ll /u01/fuse_fs/msa/

total 1

drwxr-xr-x 5 delphix nobody 4096 Aug 18 05:20 books

[delphix@linuxsource ~]$ rm -rf /u01/fuse_fs/msa/books

[delphix@linuxsource ~]$ ll /u01/fuse_fs/msa/

total 0

[delphix@linuxsource u01]$ /u02/hadoop/bin/hadoop fs -ls /msa

2020-08-18 08:38:40,748 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

[delphix@linuxsource u01]$
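The empty `hadoop fs -ls /msa` result confirms that the deletion made through the FUSE mount was applied to HDFS. To repeat this kind of cross-check, here is a minimal sketch that compares the names seen through the mount with the names reported by `hadoop fs -ls`. It assumes each entry line of `hadoop fs -ls` starts with a permission string (as in the outputs in this post) and that names contain no spaces; `hdfs_ls_names` and `same_listing` are hypothetical helpers.

```shell
#!/bin/sh
# Hypothetical helper: read `hadoop fs -ls` output on stdin and print the
# entry names (basenames), sorted. Only lines starting with "-" (file) or
# "d" (directory) are kept, so the "Found N items" header and WARN lines
# are ignored. Assumes names contain no spaces.
hdfs_ls_names() {
  awk '/^[-d]/ { n = split($NF, parts, "/"); print parts[n] }' | sort
}

# Succeed if local directory $1 (e.g. a path under the FUSE mount) holds
# exactly the entries listed in the `hadoop fs -ls` output passed as $2.
same_listing() {
  fuse_names=$(ls -1 "$1" | sort)
  hdfs_names=$(printf '%s\n' "$2" | hdfs_ls_names)
  [ "$fuse_names" = "$hdfs_names" ]
}
```

Usage: `same_listing /u01/fuse_fs/msa/books "$(/u02/hadoop/bin/hadoop fs -ls /msa/books 2>/dev/null)" && echo "listings match"`.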

Copy some files to the FUSE filesystem (recreate the books directory first, since we removed it above) and check their content using both standard Linux and HADOOP FS commands:

[delphix@linuxsource u01]$ cp /tmp/pg*.txt /u01/fuse_fs/msa/books

[delphix@linuxsource ~]$ ll /u01/fuse_fs/msa/books

-rw-r--r-- 1 delphix supergroup 3322651 2020-08-18 08:40 /msa/pg135.txt

-rw-r--r-- 1 delphix supergroup 594933 2020-08-18 08:40 /msa/pg1661.txt

-rw-r--r-- 1 delphix supergroup 1423803 2020-08-18 08:40 /msa/pg5000.txt

[delphix@linuxsource u01]$ /u02/hadoop/bin/hadoop fs -ls /msa/books

2020-08-18 08:40:55,486 WARN util.NativeCodeLoader: Unable to load native-hadoop library for your platform... using builtin-java classes where applicable

Found 3 items

-rw-r--r-- 1 delphix supergroup 3322651 2020-08-18 08:40 /msa/books/pg135.txt

-rw-r--r-- 1 delphix supergroup 594933 2020-08-18 08:40 /msa/books/pg1661.txt

-rw-r--r-- 1 delphix supergroup 1423803 2020-08-18 08:40 /msa/books/pg5000.txt

As we can see, the FUSE mountable filesystem allows us to interact with HDFS instances using standard Linux utilities.
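Matching listings and sizes only show that the files exist. A stricter check compares each copied file byte-for-byte with its source by reading it back through the FUSE mount. A minimal sketch, reusing the paths and the `pg*.txt` pattern from the example above; `verify_copy` is a hypothetical helper.

```shell
#!/bin/sh
# Hypothetical helper: compare every file in SRC_DIR matching PATTERN with the
# file of the same name in DST_DIR, using cmp for a byte-for-byte check.
# Prints OK/DIFF per file and returns 1 if any file differs or is missing.
verify_copy() {
  src_dir="$1"; dst_dir="$2"; pattern="$3"; rc=0
  for f in "$src_dir"/$pattern; do          # $pattern is left unquoted so it globs
    [ -e "$f" ] || continue                 # the glob matched nothing
    name=$(basename "$f")
    if cmp -s "$f" "$dst_dir/$name"; then   # cmp -s: silent, exit 0 if identical
      echo "OK   $name"
    else
      echo "DIFF $name" >&2
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `verify_copy /tmp /u01/fuse_fs/msa/books 'pg*.txt'`. Quoting the pattern keeps the calling shell from expanding it, so the glob is evaluated inside the source directory.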

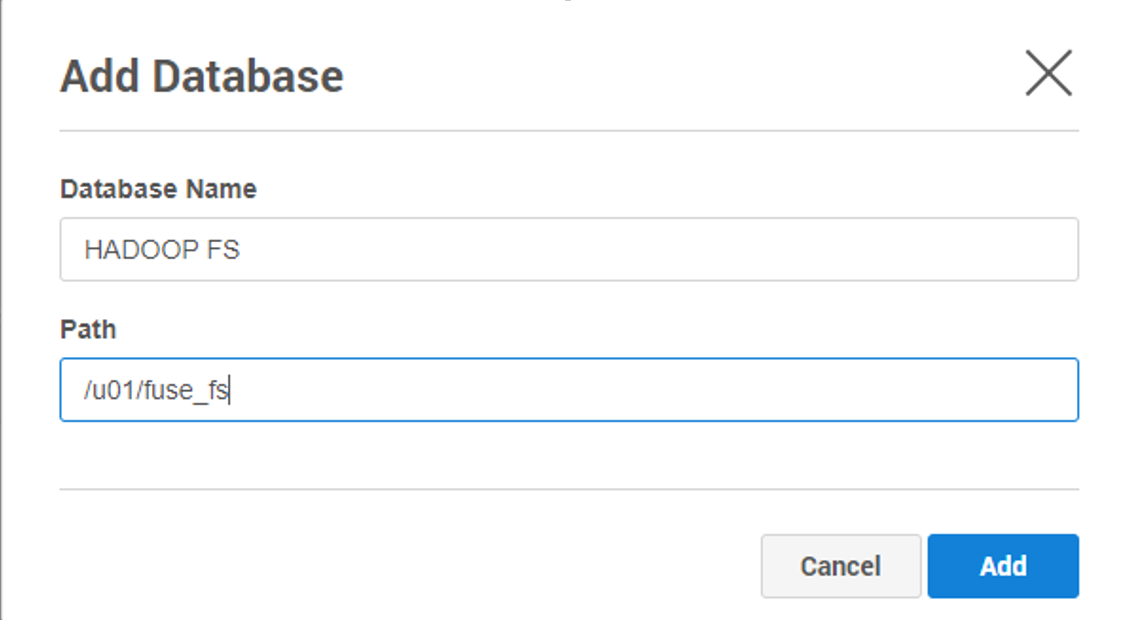

Because Delphix loves APPDATA (aka virtual FS), we will feed it the FUSE filesystem to link our HADOOP FS instance.

![HOW DELPHIX HELPS ANSWER COMPLIANCE REGELATORY [Part-I]](https://blogger.googleusercontent.com/img/b/R29vZ2xl/AVvXsEgs8fiyxWTbh7XbbB9cz8Fg6e7jUj2bgZofl4X29Lz4ykzIKGDHm9vzBjxRq-SO7pYdzBshpbPjaM0nfHLXIsJlRa3_bOde6estlgoYGXVrVE4xcqApZlaAzUjP0_k6XPqurd9nrJXRZfxX/s72-c/pic1.png)
